Key Takeaways

- GPT-4o's voice mode makes conversations with ChatGPT feel more natural.

- New features include faster response times and the ability to speak in different tones.

- It will be rolled out to a small percentage of ChatGPT Plus subscribers first, with a wider release planned for the fall.

After a longer-than-expected wait, OpenAI's Sam Altman said in a reply on X that GPT-4o's new voice features will finally start rolling out next week. This alpha release will initially be limited to a small percentage of ChatGPT Plus subscribers, with a wider release planned for the fall.

In May, OpenAI showed off its new model, GPT-4o. The demo included some impressive new features, including the ability to respond to information from a real-time video feed and a new voice feature that makes a conversation with GPT-4o feel more like talking to a human. When GPT-4o was released, it didn’t have voice capabilities, but an in-app message indicated that a new voice mode feature was scheduled to roll out soon. Now, it looks like it’s finally starting to roll out.

Related

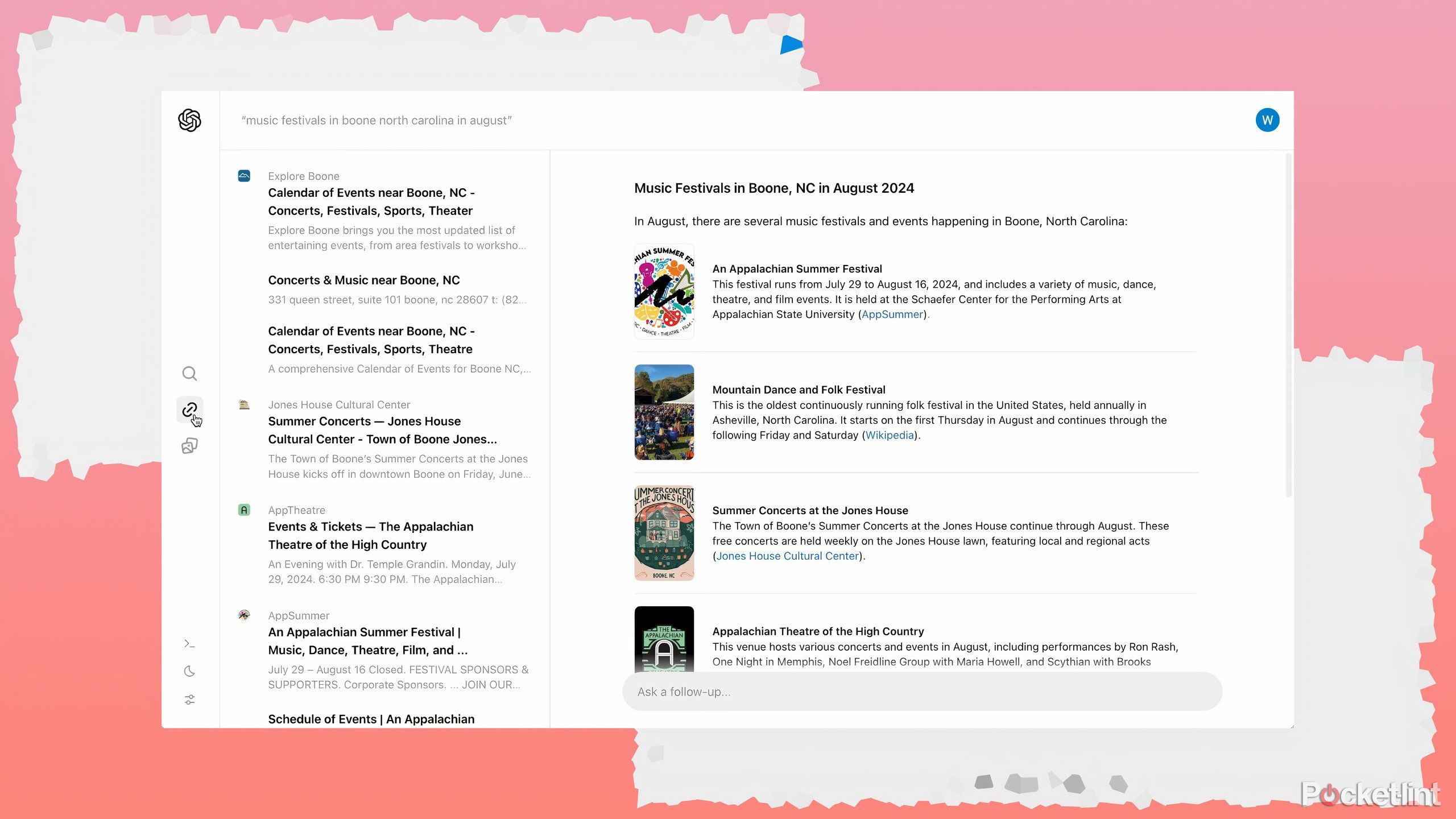

SearchGPT Explained: What is SearchGPT and How to Try It First

There have been rumors for some time that OpenAI is working on a competitor to Google Search, but now it’s finally happening.

GPT-4o Voice makes conversations with ChatGPT feel more natural.

The voice capabilities will be more powerful and come with some additional features.

Even before GPT-4o was released, it was possible to talk to GPT-4 in voice mode, but its average latency of 5.4 seconds made natural conversation difficult: after speaking, you had to watch a thought-bubble animation for several seconds before getting a response.

The new GPT-4o voice mode cuts the average response time to just 320 ms, with some responses arriving in as little as 232 ms. This makes a conversation with GPT-4o feel nearly instantaneous. In the launch demo, the responses were incredibly fast. You can also interrupt a response simply by speaking again: the voice response stops and GPT-4o starts listening again.


But speed isn't the only change: you can also make GPT-4o speak in different tones and styles. In the demo video, GPT-4o speaks in a sarcastic tone, talks like a sports announcer, counts to 10 at different speeds, and sings "Happy Birthday." If the real-world features are as impressive as the demo, talking to GPT-4o will truly feel like talking to another person.

GPT-4o's voice mode also supports real-time translation. For example, one person can speak to GPT-4o in one language and another person in a different language, and GPT-4o will repeat each phrase in the other person's language. This lets two people who don't share a language hold a conversation.

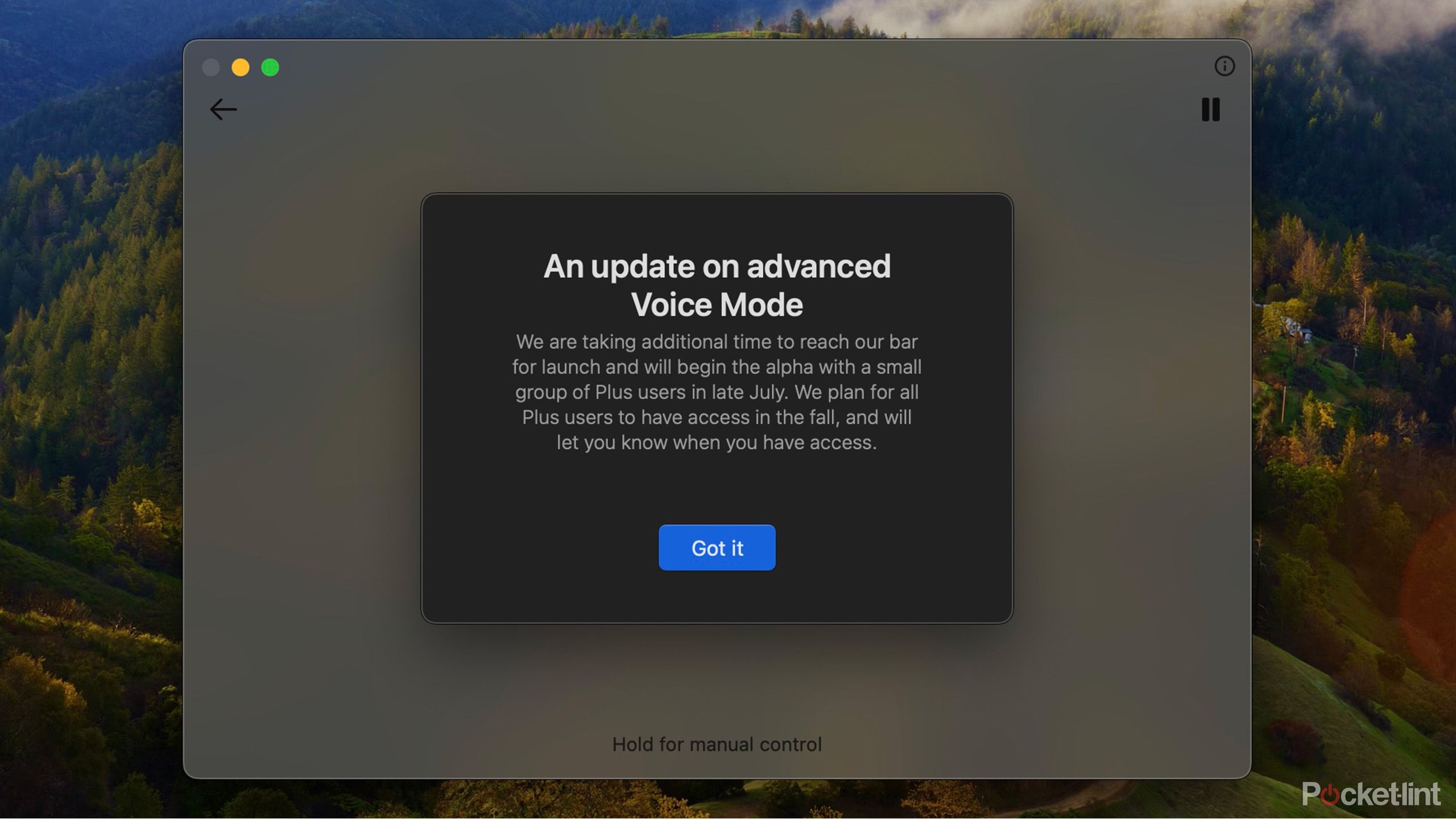

We’ll have to wait a little longer for GPT-4o’s voice mode.

New features will be released only to a small group of ChatGPT Plus users.

The first release of the new features has been a long time coming. OpenAI said in May that voice mode would roll out "in the coming weeks," yet more than ten weeks have passed since the announcement. The wait is almost over, though, at least for a small number of people. In addition to Sam Altman's confirmation on X, a message within the ChatGPT app states that OpenAI will "launch an alpha for a small number of Plus users in late July."

This small initial rollout means that even if you're a ChatGPT Plus subscriber, you're unlikely to get access to the new voice mode next week. However, the message also states that "access is expected to be available to all Plus users in the fall," so the rest of us probably won't have to wait too long. One thing's for sure: when the new voice mode is released, it won't sound anything like Scarlett Johansson.