This is a guest post co-authored with Tim Krause, Lead MLOps Architect at CONXAI.

CONXAI Technology GmbH is pioneering the development of an advanced AI platform for the architecture, engineering, and construction (AEC) industry. Our platform uses advanced AI to enable construction domain experts to create complex use cases efficiently.

Construction sites typically employ multiple CCTV cameras, generating huge amounts of visual data. AI can extract valuable insights from these camera feeds. However, to comply with GDPR regulations, all individuals captured in the footage must be anonymized by masking or blurring their identities.
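One way a segmentation model enables this kind of anonymization: once every pixel is labeled with a class, all pixels of the person class can be masked. The following minimal sketch assumes a grayscale image and a per-pixel class map stored as nested lists, a hypothetical class ID of 1 for "person", and black (0) as the fill value. A production pipeline would operate on image arrays and might blur rather than mask.

```python
from typing import List

Image = List[List[int]]      # grayscale image, one int per pixel
ClassMap = List[List[int]]   # per-pixel class IDs from a segmentation model

PERSON_CLASS_ID = 1  # hypothetical class ID for "person"
FILL_VALUE = 0       # hypothetical fill value (black) for masked pixels


def mask_people(image: Image, class_map: ClassMap,
                person_id: int = PERSON_CLASS_ID,
                fill: int = FILL_VALUE) -> Image:
    """Return a copy of `image` with every person pixel replaced by `fill`."""
    if len(image) != len(class_map) or any(
        len(img_row) != len(cls_row) for img_row, cls_row in zip(image, class_map)
    ):
        raise ValueError("image and class_map must have the same shape")
    return [
        [fill if cls == person_id else px for px, cls in zip(img_row, cls_row)]
        for img_row, cls_row in zip(image, class_map)
    ]


if __name__ == "__main__":
    image = [[10, 20, 30],
             [40, 50, 60]]
    class_map = [[0, 1, 1],
                 [0, 0, 1]]
    print(mask_people(image, class_map))  # [[10, 0, 0], [40, 50, 0]]
```

Working from the segmentation output keeps the anonymization step independent of the model itself: any model that produces a per-pixel class map can feed it.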

In this post, we dive deep into how CONXAI hosts the state-of-the-art OneFormer segmentation model on AWS using Amazon Simple Storage Service (Amazon S3), Amazon Elastic Kubernetes Service (Amazon EKS), KServe, and NVIDIA Triton.

Our AI solution is offered in two forms:

- Model as a Service (MaaS) – Our AI model is accessible through an API, enabling seamless integration. Pricing is based on processing batches of 1,000 images, offering users flexibility and scalability.

- Software as a Service (SaaS) – This option provides a user-friendly dashboard that acts as a central control panel. Users can add and manage new cameras, view footage, perform analytical searches, and enforce GDPR compliance with automatic anonymization.

Our AI model is fine-tuned on a unique dataset of over 50,000 self-labeled images from construction sites, which gives it much higher accuracy than other MaaS solutions. It can recognize more than 40 specialized object classes, such as cranes, excavators, and portable toilets, making our AI solution uniquely designed and optimized for the construction industry.

Our Journey to AWS

Initially, CONXAI started with a small cloud provider specializing in affordable GPUs. However, it lacked critical services required for machine learning (ML) applications, such as frontend and backend infrastructure, DNS, load balancers, scaling, blob storage, and managed databases. At that time, the application was deployed as a single monolithic container that included Kafka and a database. This setup was neither scalable nor maintainable.

After migrating to AWS, we gained access to a robust ecosystem of services. Initially, we deployed the all-in-one AI container on a single Amazon Elastic Compute Cloud (Amazon EC2) instance. Although this provided a basic solution, it wasn't scalable, so we had to develop a new architecture.

Our decision to choose AWS was driven primarily by our team's extensive experience with AWS. Additionally, the initial cloud credits provided by AWS were invaluable for us as a startup. We now use AWS managed services wherever possible, particularly for data-related tasks, to minimize maintenance overhead and pay only for the resources we actually use.

At the same time, we aimed to remain cloud-agnostic. To achieve this, we chose Kubernetes, which lets us deploy our stack directly at a customer's edge, such as on a construction site, when needed. Some customers have very restrictive compliance requirements that don't allow data to leave the construction site. Another opportunity is federated learning: training on the customer's edge and transferring only the model weights, without sensitive data, to the cloud. In the future, this approach might let us fine-tune one model per camera to achieve the best accuracy, which requires hardware resources on-site. For now, we use Amazon EKS to offload the management overhead to AWS, but we could easily deploy to a standard Kubernetes cluster if needed.
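Federated learning is only a future option here, and the post doesn't specify how weights would be aggregated in the cloud. As an illustration, the following minimal sketch assumes the common federated averaging (FedAvg) scheme: each site uploads only its model weights (flattened to a list of floats for simplicity) and its local sample count, and the cloud computes a sample-weighted average. No images leave the site.

```python
from typing import List, Sequence, Tuple

# (flattened model weights, number of local training samples) from one site
ClientUpdate = Tuple[Sequence[float], int]


def federated_average(updates: Sequence[ClientUpdate]) -> List[float]:
    """FedAvg: average client weights, weighting each client by its sample count."""
    if not updates:
        raise ValueError("need at least one client update")
    size = len(updates[0][0])
    if any(len(weights) != size for weights, _ in updates):
        raise ValueError("all clients must share the same model shape")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    return [sum(weights[i] * n for weights, n in updates) / total for i in range(size)]


if __name__ == "__main__":
    site_a = ([1.0, 2.0], 100)  # hypothetical construction site A
    site_b = ([3.0, 4.0], 300)  # hypothetical construction site B
    print(federated_average([site_a, site_b]))  # [2.5, 3.5]
```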

Our previous model ran on TorchServe. With the new model, we first tried performing inference in Python with Flask and PyTorch, but it was very challenging to achieve high inference throughput with the high GPU utilization needed for cost-effectiveness. Exporting the model to ONNX format was particularly difficult because the OneFormer model lacks strong community support. It took us some time to identify why the OneFormer model was so slow in ONNX Runtime with NVIDIA Triton. We ultimately solved the problem by converting the ONNX model to TensorRT.
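The conversion path described above (ONNX to TensorRT, served by Triton) can be sketched as follows. This minimal sketch assumes the ONNX export already exists; it only builds the command line for TensorRT's `trtexec` tool (`--onnx`, `--saveEngine`, `--fp16`) and the Triton model repository layout for a TensorRT engine (`<repository>/<model>/<version>/model.plan` plus a `config.pbtxt` with `platform: "tensorrt_plan"`). The model name `oneformer`, the version number, and enabling FP16 by default are hypothetical choices, not necessarily what CONXAI uses.

```python
import subprocess
from pathlib import Path
from typing import List


def trtexec_command(onnx_path: Path, engine_path: Path, fp16: bool = True) -> List[str]:
    """Build the argv for TensorRT's trtexec CLI to turn an ONNX file into an engine."""
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels; re-validate accuracy afterwards
    return cmd


def triton_engine_path(repo: Path, model_name: str, version: int) -> Path:
    """Triton expects TensorRT engines at <repo>/<model_name>/<version>/model.plan."""
    return repo / model_name / str(version) / "model.plan"


def triton_config(model_name: str, max_batch_size: int = 0) -> str:
    """Minimal config.pbtxt. max_batch_size 0 means Triton does not treat the first
    dimension as a batch dimension; use > 0 only for engines built with a dynamic
    batch dimension. Inputs/outputs are omitted here and would need to be added
    if Triton's config auto-complete is disabled."""
    return (
        f'name: "{model_name}"\n'
        'platform: "tensorrt_plan"\n'
        f"max_batch_size: {max_batch_size}\n"
    )


if __name__ == "__main__":
    repo = Path("model_repository")
    engine = triton_engine_path(repo, "oneformer", 1)  # hypothetical name/version
    engine.parent.mkdir(parents=True, exist_ok=True)
    (repo / "oneformer" / "config.pbtxt").write_text(triton_config("oneformer"))
    # Requires TensorRT's trtexec on PATH and a GPU:
    subprocess.run(trtexec_command(Path("oneformer.onnx"), engine), check=True)
```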

Defining the final architecture, training the model, and optimizing costs took about 2-3 months. We now keep improving our model by adding more, and more accurately labeled, training data; each training run takes about 3-4 weeks on a single GPU. Deployment is fully automated with GitLab CI/CD pipelines, Terraform, and Helm, and takes less than an hour to complete with no downtime. New model versions are typically deployed in shadow mode for 1-2 weeks to verify stability and accuracy before full deployment.
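Shadow mode itself is handled by the infrastructure (both models receive the same images, but only production results are returned), while the comparison logic is easy to illustrate. The following minimal sketch assumes segmentation masks stored as nested lists, a hypothetical agreement metric (mean intersection over union for one class), and a hypothetical 0.9 threshold. Agreement with production is only a stability signal; measuring accuracy would additionally require labeled ground truth.

```python
from typing import List, Sequence

Mask = List[List[int]]  # per-pixel class IDs predicted by a segmentation model


def iou(a: Mask, b: Mask, class_id: int) -> float:
    """Intersection over union of the `class_id` pixels in two same-shaped masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            in_a, in_b = pa == class_id, pb == class_id
            inter += int(in_a and in_b)
            union += int(in_a or in_b)
    # If neither model predicted the class anywhere, they agree perfectly.
    return 1.0 if union == 0 else inter / union


def shadow_agrees(prod: Sequence[Mask], shadow: Sequence[Mask],
                  class_id: int, threshold: float = 0.9) -> bool:
    """True if the mean IoU between production and shadow masks reaches `threshold`."""
    if not prod or len(prod) != len(shadow):
        raise ValueError("need the same non-empty list of images for both models")
    scores = [iou(p, s, class_id) for p, s in zip(prod, shadow)]
    return sum(scores) / len(scores) >= threshold


if __name__ == "__main__":
    production = [[[1, 1], [0, 0]]]
    shadow = [[[1, 0], [0, 0]]]
    print(iou(production[0], shadow[0], class_id=1))     # 0.5
    print(shadow_agrees(production, shadow, class_id=1))  # False
```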

Solution overview

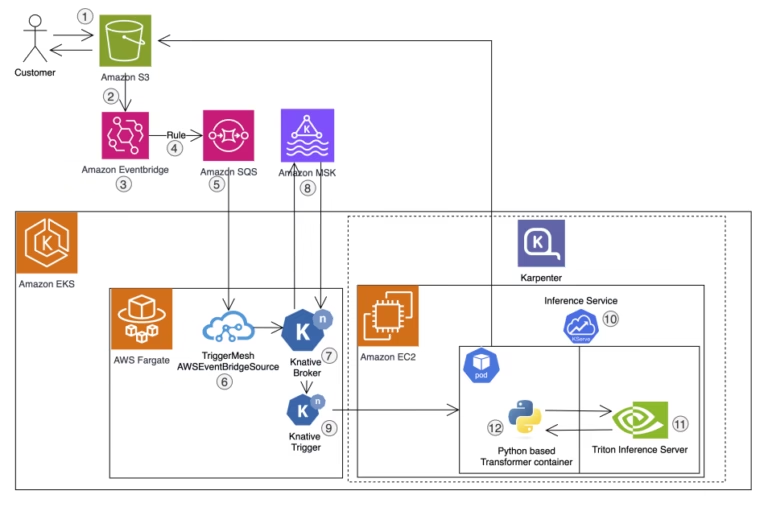

The following diagram illustrates the solution architecture.

The architecture consists of the following key components:

- The S3 bucket (1) is the most important data source. It is cost-effective, scalable, and provides almost unlimited blob storage. We encrypt the S3 bucket and delete all data with privacy concerns after processing is complete. Almost all microservices read and write files from and to Amazon S3, which ultimately triggers Amazon EventBridge (3). The process begins when a customer uploads an image to Amazon S3 using a presigned URL provided by our API, which handles user authentication and authorization through Amazon Cognito.

- The S3 bucket is configured to forward all events to EventBridge (2).

- TriggerMesh is a Kubernetes controller; we use its AWSEventBridgeSource (6). It abstracts the infrastructure automation and automatically creates an Amazon Simple Queue Service (Amazon SQS) (5) processing queue. Additionally, it creates an EventBridge rule (4) to forward S3 events from the event bus to the SQS processing queue. Finally, TriggerMesh creates a Kubernetes pod that polls events from the processing queue and feeds them into the Knative Broker (7). The resources in the Kubernetes cluster are deployed in a private subnet.

- The central place for Knative Eventing is the Knative Broker (7). It is backed by Amazon Managed Streaming for Apache Kafka (Amazon MSK) (8).

- The Knative Trigger (9) polls the Knative Broker based on the CloudEvent type and forwards events accordingly to the KServe InferenceService (10).

- KServe is a standard model inference platform on Kubernetes. It uses Knative Serving as its foundation and is fully compatible with Knative Eventing. It also pulls models from a model repository into the container before the model server starts, eliminating the need to build a new container image for each model version.

- We use KServe's "Collocate transformer and predictor in same pod" feature to maximize inference speed and throughput, because containers within the same pod communicate over localhost and the network traffic never leaves the node.

- After many performance tests, we achieved the best performance with the NVIDIA Triton Inference Server (11) after converting our model first to ONNX and then to TensorRT.

- Our transformer (12) uses Flask with Gunicorn and is optimized for the number of workers and CPU cores to keep GPU utilization above 90%. The transformer receives a CloudEvent that references the Amazon S3 path of an image, downloads the image, and performs model inference over HTTP. After getting back the model results, it performs postprocessing and uploads the final processed results to Amazon S3.

- We use Karpenter as the cluster autoscaler. Karpenter is responsible for scaling the inference components to handle high user request loads. When demand increases, Karpenter launches new EC2 instances, allowing the system to automatically scale up its compute resources to meet the growing workload.

Overall, the architecture is divided into two main parts: AWS managed data services and the Kubernetes cluster.

- The S3 bucket, EventBridge, SQS queues, and Amazon MSK are all fully managed AWS services. This keeps our data management effort low.

- We use Amazon EKS for everything else. TriggerMesh, AWSEventBridgeSource, Knative Broker, Knative Trigger, KServe with our Python transformer, and Triton Inference Server all run in the same EKS cluster on dedicated EC2 instances with GPUs. Because the EKS cluster is used only for processing, it is completely stateless.

Summary

From initially running our own highly customized model, through the transition to AWS and an improved architecture, to introducing our new OneFormer model, CONXAI is now proud to provide scalable, reliable, and secure ML inference to customers, enabling improvements and acceleration on construction sites. We achieved GPU utilization of over 90%, and the number of processing errors has dropped almost to zero in recent months. One of the major design choices was separating the model from the preprocessing and postprocessing code in the transformer. With this technology stack, we gained the ability to scale down to zero on Kubernetes using Knative's serverless scaling, while our scale-up time from a cold state is only 5-10 minutes. This can save significant infrastructure costs for potential batch inference use cases.
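The following minimal sketch illustrates that separation between the transformer and the model server. It assumes a hypothetical `Transformer` class whose `predict` callable stands in for the HTTP call to Triton, placeholder preprocessing (scaling 8-bit pixels to [0, 1]), and placeholder postprocessing (per-class pixel counts). It is not CONXAI's actual transformer code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Image = List[List[int]]       # 8-bit grayscale pixels
Tensor = List[List[float]]    # preprocessed model input
ClassMap = List[List[int]]    # per-pixel class IDs returned by the model


@dataclass
class Transformer:
    # Stand-in for the HTTP client that calls Triton (hypothetical interface).
    predict: Callable[[Tensor], ClassMap]
    class_names: Dict[int, str]

    def preprocess(self, image: Image) -> Tensor:
        """Placeholder preprocessing: scale 8-bit pixel values into [0, 1]."""
        return [[px / 255.0 for px in row] for row in image]

    def postprocess(self, class_map: ClassMap) -> Dict[str, int]:
        """Placeholder postprocessing: count pixels per class name."""
        counts: Dict[str, int] = {}
        for row in class_map:
            for cls in row:
                name = self.class_names.get(cls, "unknown")
                counts[name] = counts.get(name, 0) + 1
        return counts

    def __call__(self, image: Image) -> Dict[str, int]:
        # The model server only ever sees preprocessed tensors.
        return self.postprocess(self.predict(self.preprocess(image)))


if __name__ == "__main__":
    def fake_model(x: Tensor) -> ClassMap:
        # Fake predictor: pixels brighter than 0.5 are labeled class 1.
        return [[1 if v > 0.5 else 0 for v in row] for row in x]

    t = Transformer(predict=fake_model, class_names={0: "background", 1: "person"})
    print(t([[0, 255], [200, 10]]))  # {'background': 2, 'person': 2}
```

With this split, the model behind `predict` can be swapped (for example, for a new TensorRT engine) without touching the pre- and postprocessing code, and the CPU-bound transformer and GPU-bound model server can be tuned independently.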

The next important step is to use these model results for proper analytics and data science. These results can also serve as a data source for generative AI features such as automatic report generation. Furthermore, we want to label more diverse images and train the model on additional construction domain classes as part of our continuous improvement process. We also work closely with AWS specialists to bring our model to AWS Inferentia chipsets for better cost-efficiency.

For more information about the model serving stack used in this solution, see the following resources:

About the authors

Tim Krause is Lead MLOps Architect at CONXAI. He takes care of all activities where AI meets infrastructure. He joined the company with prior platform, Kubernetes, DevOps, and big data experience, and has trained LLMs from scratch.

Mehdi Yosofie is a Solutions Architect at AWS who works with startup customers and leverages his expertise to help them design their workloads on AWS.
