As generative artificial intelligence (AI) continues to transform every industry, effective prompt optimization through prompt engineering techniques has become key to efficiently balancing output quality, response time, and cost. Prompt engineering is the practice of crafting and optimizing the inputs to foundation models (FMs) or large language models (LLMs), by selecting appropriate words, phrases, sentences, punctuation, and delimiters, so that you can use these models effectively across a wide variety of applications. High-quality prompts maximize the chances of getting the right response from a generative AI model.

A fundamental part of the optimization process is evaluation, and evaluating a generative AI application involves multiple elements. Beyond the more common evaluation of FMs, evaluating prompts is a critical, yet often challenging, aspect of developing high-quality AI-powered solutions. Many organizations struggle to consistently create and effectively evaluate prompts across their applications, which leads to inconsistent performance and user experience, as well as undesired responses from the model.

In this post, we show how to implement an automated prompt evaluation system with Amazon Bedrock Prompt Management and Amazon Bedrock Prompt Flows. With this system, you can systematically evaluate the prompts of your generative AI application at scale, streamline your prompt development process, and improve the overall quality of your AI-generated content.

The importance of prompt evaluation

Before we dive into the technical implementation, let’s briefly discuss why prompt evaluation is important. The key aspects to consider when building and optimizing a prompt are usually:

- Quality assurance – Evaluating prompts helps ensure that your AI application consistently produces high-quality, relevant output with the model you select.

- Performance optimization – Identifying and refining effective prompts can improve the overall performance of generative AI models in terms of reducing latency and ultimately increasing throughput.

- Cost efficiency – Better prompts can lead to more efficient use of AI resources and potentially reduce the costs associated with model inference. Well-crafted prompts can make smaller, less expensive models viable, whereas poor prompts produce poor results.

- User experience – Improved prompts lead to more accurate, personalized, and helpful AI-generated content, which improves the end-user experience in your application.

Optimizing prompts for these aspects is an iterative process that requires evaluation to drive prompt adjustments. In other words, evaluation is how you understand how well a particular prompt and model combination produces the desired answer.

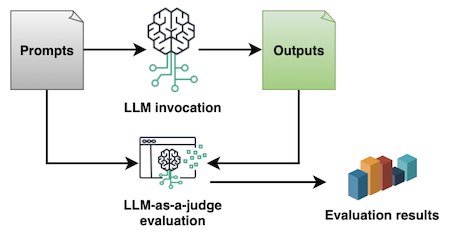

In this example, we implement a method called LLM-as-a-judge, in which an LLM evaluates prompts against predefined criteria based on the answers a particular model generates for them. Evaluating prompts and their answers for a given LLM is inherently subjective, but systematic prompt evaluation with an LLM as the judge lets you quantify it with an evaluation metric expressed as a numerical score. This helps standardize and automate the prompting lifecycle in your organization, and it is one of the reasons why this method is among the most common approaches to prompt evaluation in the industry.
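To make the LLM-as-a-judge idea concrete, the following minimal Python sketch shows how a judge prompt can combine the prompt under test, the answer a model produced for it, and the evaluation criteria into a single request. The template wording, the criteria, and the JSON key names are illustrative assumptions, not the exact template used in this post; only the {{variable}} placeholder style mirrors the one used later in the flow configuration.

```python
from __future__ import annotations

import re

# Illustrative judge template (an assumption, not the template from this post).
# {{input}} is the prompt under test; {{output}} is the answer a model gave to it.
JUDGE_TEMPLATE = """You are an evaluator of prompts and of the answers a generative AI model gives to them.
<input>
{{input}}
</input>
<output>
{{output}}
</output>
Score the prompt from 0 to 100 for clarity, context, and specificity.
Score the answer from 0 to 100 for correctness, completeness, and alignment with the prompt.
Penalize any hallucination heavily.
Respond only with JSON containing the keys "prompt-score", "answer-score",
"justification", and "prompt-recommendations"."""

# Matches {{name}} placeholders.
_PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render(template: str, variables: dict[str, str]) -> str:
    """Replace every {{name}} placeholder with its value, failing on missing variables."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing template variable: {name}")
        return variables[name]

    return _PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    judge_prompt = render(
        JUDGE_TEMPLATE,
        {
            "input": "What is cloud computing, in one paragraph?",
            "output": "Cloud computing is the on-demand delivery of IT resources over the internet.",
        },
    )
    print(judge_prompt)
```

The rendered text is what the judge model receives. Because the template asks for numeric scores in a fixed JSON shape, verdicts for many prompts can later be compared and aggregated automatically.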

Let’s look at a sample solution for evaluating prompts with LLM-as-a-judge on Amazon Bedrock. You can also find the complete code example in amazon-bedrock-samples.

Prerequisites

For this example, you need:

Set up the evaluation prompt

To create an evaluation prompt using Amazon Bedrock Prompt Management, follow these steps:

- On the Amazon Bedrock console, in the navigation pane, choose Prompt management, and then choose Create prompt.

- Enter a name for your prompt, such as prompt-evaluator, and a description, such as “Prompt template for evaluating prompt responses with LLM-as-a-judge”, and then choose Create.

- In the Prompt field, write your prompt evaluation template. For example, you can use a template like the one below, or adapt it to your specific evaluation requirements:

- Under Configurations, select the model you want to use to run the evaluations. In this example, we selected Anthropic Claude Sonnet. The quality of the evaluation depends on the model you select in this step, so balance quality, response time, and cost when making your choice.

- Set the Inference parameters for the model. We recommend setting Temperature to 0 so that the evaluation is as factual and deterministic as possible and less prone to hallucinations.

You can test your evaluation prompt with sample inputs and outputs using the Test variables and Test window panels.

- Now that you have a draft of your prompt, you can also create versions of it. Versions let you quickly switch between different configurations of your prompt and update your application with the version that best suits your use case. To create a version, choose Create version at the top.

The following screenshot shows the Prompt builder page.
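If you prefer to script the evaluation prompt instead of using the console, the following sketch builds a request with the same settings as the steps above: a template with two input variables, input and output, and a temperature of 0. It assumes the request shape of the Amazon Bedrock CreatePrompt operation (bedrock-agent); the model ID, variant name, and function name are hypothetical examples, so check them against the API reference before use.

```python
from __future__ import annotations

# Hypothetical example model ID; use a Claude Sonnet (or other) model enabled in your account.
EVALUATOR_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_evaluator_prompt_request(template: str, model_id: str = EVALUATOR_MODEL_ID) -> dict:
    """Build keyword arguments for a CreatePrompt call, assuming its request shape.

    The template must contain {{input}} (the prompt under test) and
    {{output}} (the answer being judged). Temperature is set to 0 to keep
    the judge's scoring as factual and repeatable as possible.
    """
    for var in ("input", "output"):
        if "{{" + var + "}}" not in template:
            raise ValueError("template is missing the {{" + var + "}} placeholder")
    return {
        "name": "prompt-evaluator",
        "description": "Prompt template for evaluating prompt responses with LLM-as-a-judge",
        "defaultVariant": "variantOne",  # hypothetical variant name
        "variants": [
            {
                "name": "variantOne",
                "templateType": "TEXT",
                "modelId": model_id,
                "templateConfiguration": {
                    "text": {
                        "text": template,
                        "inputVariables": [{"name": "input"}, {"name": "output"}],
                    }
                },
                "inferenceConfiguration": {"text": {"temperature": 0.0}},
            }
        ],
    }
```

With boto3 installed and credentials configured, you could pass the result to boto3.client("bedrock-agent").create_prompt(**request) and then create a version with create_prompt_version, using the prompt ID returned by the first call. Validating the placeholders up front catches a template that would otherwise silently ignore the prompt or the answer.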

Set up the evaluation flow

Next, you need to build an evaluation flow using Amazon Bedrock Prompt Flows. In this example, we use prompt nodes. For more information on the supported node types, see the Node types in prompt flow documentation. To build the evaluation flow, follow these steps:

- On the Amazon Bedrock console, under Prompt flows, choose Create prompt flow.

- Enter a name such as prompt-eval-flow and a description such as “Prompt flow for evaluating prompts with LLM-as-a-judge”. Select Use an existing service role, choose a role from the dropdown list, and choose Create.

- In the Prompt flow builder, drag two Prompt nodes onto the canvas and configure the nodes with the following parameters:

  - Flow input
    - Output:
      - Name: document, Type: String

  - Invoke (Prompt)
    - Node name: Invoke
    - Define in node
    - Select model: the model whose answers to your prompts you want to evaluate
    - Message: {{input}}
    - Inference configurations: as per your preferences
    - Input:
      - Name: input, Type: String, Expression: $.data
    - Output:
      - Name: modelCompletion, Type: String

  - Evaluate (Prompt)
    - Node name: Evaluate
    - Use a prompt from your Prompt Management
    - Prompt: prompt-evaluator
    - Version: Version 1 (or your preferred version)
    - Select model: the model you want to use as the judge
    - Inference configurations: as set in your prompt
    - Input:
      - Name: input, Type: String, Expression: $.data
      - Name: output, Type: String, Expression: $.data
    - Output:
      - Name: modelCompletion, Type: String

  - Flow output
    - Node name: End
    - Input:
      - Name: document, Type: String, Expression: $.data

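- To connect the nodes, drag the connection dots as shown in the following image. Connect the Flow input node's document output to the input of both the Invoke and Evaluate nodes, connect the Invoke node's modelCompletion output to the Evaluate node's output input, and connect the Evaluate node's modelCompletion output to the Flow output node's document input.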
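To clarify how data moves between the nodes, the following sketch simulates the flow locally in plain Python, with ordinary functions standing in for the two Prompt nodes. It is not the Amazon Bedrock API; the function names are hypothetical and exist only to illustrate the wiring.

```python
from typing import Callable


def run_evaluation_flow(
    document: str,
    invoke_model: Callable[[str], str],
    evaluate: Callable[[str, str], str],
) -> str:
    """Local stand-in for Flow input -> Invoke -> Evaluate -> Flow output."""
    # Flow input: emits `document`, the prompt under test.
    prompt = document
    # Invoke node: receives the prompt as `input`, emits `modelCompletion` (the answer).
    answer = invoke_model(prompt)
    # Evaluate node: receives the prompt as `input` and the answer as `output`,
    # and emits its own `modelCompletion` (the judge's verdict).
    verdict = evaluate(prompt, answer)
    # Flow output: receives the verdict as `document`.
    return verdict


if __name__ == "__main__":
    # Stub functions stand in for the model calls so the wiring can run offline.
    def fake_invoke(prompt: str) -> str:
        return f"Answer to: {prompt}"

    def fake_judge(prompt: str, answer: str) -> str:
        return '{"prompt-score": 80, "answer-score": 90}'

    print(run_evaluation_flow("What is cloud computing?", fake_invoke, fake_judge))
```

The key design point is that the Evaluate node needs two inputs from different sources: the original prompt from the Flow input node and the answer from the Invoke node. That is why the Flow input connects to both prompt nodes.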

To test the prompt evaluation flow, use the Test prompt flow panel. Pass an input, such as the question “What is cloud computing, in one paragraph?”, and the flow returns a JSON document with the evaluation results, similar to the following example. In the code example notebook in amazon-bedrock-samples, we also included information about the models used for invocation and evaluation in the resulting JSON.

As the example shows, we asked the FM to evaluate, with separate scores, both the prompt and the answer generated from that prompt. We also asked it to justify its scores and to recommend further improvements to the prompt. All this information is valuable to prompt engineers, because it helps guide optimization experiments and supports informed decisions throughout the prompt's lifecycle.
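Because the judge returns its verdict as text, it is worth validating it before you store or compare results. The following sketch parses the verdict and checks that both scores fall between 0 and 100. The key names prompt-score, answer-score, justification, and prompt-recommendations are assumptions about how your evaluation template asks the model to format its answer; adjust them to match your own template.

```python
import json
from dataclasses import dataclass


@dataclass
class Evaluation:
    prompt_score: float
    answer_score: float
    justification: str
    recommendations: str


def parse_evaluation(raw: str) -> Evaluation:
    """Parse the judge's JSON verdict, tolerating stray text around the JSON object."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in judge output")
    data = json.loads(raw[start : end + 1])
    prompt_score = float(data["prompt-score"])
    answer_score = float(data["answer-score"])
    # Reject scores outside the 0-100 scale the template asks for.
    for name, score in (("prompt-score", prompt_score), ("answer-score", answer_score)):
        if not 0 <= score <= 100:
            raise ValueError(f"{name} out of range: {score}")
    return Evaluation(
        prompt_score,
        answer_score,
        str(data.get("justification", "")),
        str(data.get("prompt-recommendations", "")),
    )
```

Tolerating text around the JSON object is a pragmatic choice: even when instructed to skip any preamble, models occasionally add one, and failing loudly on out-of-range scores surfaces judge misbehavior early.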

Implementing prompt evaluation at scale

So far, we have shown how to evaluate a single prompt. Mid-to-large organizations often deal with dozens, hundreds, or even thousands of prompt variations across multiple applications, creating a great opportunity for automation at scale. For this, you can run your flow on a complete dataset of prompts stored in a file, as shown in the sample notebook.
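The following sketch shows one way to run the flow over a file of prompts. It assumes the request and response shapes of the InvokeFlow operation in the boto3 bedrock-agent-runtime client (inputs addressed by node name and output name, results delivered as flowOutputEvent entries in responseStream), and a hypothetical JSON Lines file with one prompt field per line. The client is passed in as a parameter, so you can test the loop with a stub.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Any, Iterable


def build_flow_inputs(prompt: str, input_node_name: str) -> list[dict]:
    """InvokeFlow inputs: the prompt goes out of the Flow input node as `document`."""
    return [
        {
            "content": {"document": prompt},
            "nodeName": input_node_name,
            "nodeOutputName": "document",
        }
    ]


def extract_flow_output(response: dict) -> Any:
    """Return the document from the first flowOutputEvent in the response stream."""
    for event in response["responseStream"]:
        if "flowOutputEvent" in event:
            return event["flowOutputEvent"]["content"]["document"]
    raise RuntimeError("flow finished without an output event")


def evaluate_prompts(client, flow_id: str, alias_id: str, input_node_name: str,
                     prompts: Iterable[str]) -> list[Any]:
    """Run the evaluation flow once per prompt and collect the raw verdicts."""
    results = []
    for prompt in prompts:
        response = client.invoke_flow(
            flowIdentifier=flow_id,
            flowAliasIdentifier=alias_id,
            inputs=build_flow_inputs(prompt, input_node_name),
        )
        results.append(extract_flow_output(response))
    return results


def load_prompts(path: Path) -> list[str]:
    """Read prompts from a JSON Lines file with a hypothetical `prompt` field, skipping blank lines."""
    with path.open(encoding="utf-8") as f:
        return [json.loads(line)["prompt"] for line in f if line.strip()]
```

In practice, client would be boto3.client("bedrock-agent-runtime"), and flow_id and alias_id would identify your prompt-eval-flow and one of its aliases. The input node name must match whatever you named the Flow input node in the builder.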

Alternatively, you can use other node types in Amazon Bedrock Prompt Flows to read and store files in Amazon Simple Storage Service (Amazon S3) and implement an iterator and collector-based flow. The following diagram illustrates this type of flow. Once you have a file-based mechanism for running prompt evaluation flows on large datasets, you can also connect it to your preferred continuous integration and continuous delivery (CI/CD) tools to automate the entire process. These details are beyond the scope of this post.
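As one example of the CI/CD integration mentioned above, a pipeline step could fail the build when evaluation scores drop below agreed thresholds. This sketch assumes the scores have already been parsed from the judge's output; the function name and threshold values are hypothetical and should reflect your own quality bar.

```python
from __future__ import annotations

import statistics


def quality_gate(prompt_scores: list[float], answer_scores: list[float],
                 min_mean: float = 70.0, min_each: float = 50.0) -> list[str]:
    """Return failure messages; an empty list means the gate passes.

    Checks both the mean score and the single worst score, so one very
    poor prompt cannot hide behind a good average.
    """
    failures = []
    for label, scores in (("prompt", prompt_scores), ("answer", answer_scores)):
        if not scores:
            failures.append(f"no {label} scores to check")
            continue
        mean = statistics.fmean(scores)
        if mean < min_mean:
            failures.append(f"mean {label} score {mean:.1f} < {min_mean}")
        worst = min(scores)
        if worst < min_each:
            failures.append(f"lowest {label} score {worst:.1f} < {min_each}")
    return failures
```

A CI job could print the returned messages and exit with a nonzero status, for example sys.exit(1 if failures else 0), so that a regression in prompt quality blocks the deployment.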

Best practices and recommendations

Based on our evaluation process, here are some best practices for improving prompts:

- Iterative improvement – Use evaluation feedback to continually refine your prompts. Prompt optimization is ultimately an iterative process.

- Context matters – Make sure your prompts provide enough context for the model to generate an accurate response. Depending on the complexity of the task or question that the prompt addresses, you might need different prompt engineering techniques. You can review the prompt engineering guidelines in the Amazon Bedrock documentation and other resources on the topic from your model provider.

- Specificity matters – Be as specific as possible with your prompts and evaluation criteria. Specificity will guide the model to the desired output.

- Testing edge cases – Evaluate prompts with different inputs to validate robustness. You can also run multiple evaluations on the same prompt to compare and test for consistency of output, which may be important depending on your use case.
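To check consistency, you can evaluate the same prompt several times and look at the spread of the scores. The following sketch takes any function that returns a single score (for example, one that invokes your evaluation flow and parses the verdict) and summarizes the results with Python's statistics module; the function name and the default number of runs are illustrative assumptions.

```python
from __future__ import annotations

import statistics
from typing import Callable


def score_consistency(evaluate_once: Callable[[str], float], prompt: str, runs: int = 5) -> dict:
    """Evaluate the same prompt several times and summarize the score spread."""
    if runs < 2:
        raise ValueError("need at least two runs to measure spread")
    scores = [evaluate_once(prompt) for _ in range(runs)]
    return {
        "scores": scores,
        "mean": statistics.fmean(scores),
        "stdev": statistics.stdev(scores),  # sample standard deviation
        "range": max(scores) - min(scores),
    }
```

A large standard deviation or range suggests that either the prompt or the judge is unstable for that input, which is worth investigating before you trust a single score.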

Conclusion and next steps

By using LLM-as-a-judge with Amazon Bedrock Prompt Management and Amazon Bedrock Prompt Flows, you can implement a systematic approach to evaluating and optimizing prompts. This not only improves the quality and consistency of your AI-generated content, but also streamlines your development process, can reduce costs, and potentially improves the user experience.

We encourage you to explore these features in more depth and tailor the evaluation process to your specific use case. As you continue to refine your prompts, you will unlock the full potential of generative AI in your applications. To get started, check out the full code sample used in this post. We look forward to seeing how you use these tools to enhance your AI-powered solutions.

To learn more about Amazon Bedrock and its capabilities, see the Amazon Bedrock documentation.

About the Author

Antonio Rodriguez is a Senior Generative AI Specialist Solutions Architect at Amazon Web Services. He helps companies of all sizes solve their challenges, embrace innovation, and create new business opportunities with Amazon Bedrock. Outside of work, he loves to spend time with his family and play sports with his friends.