This post was co-authored by Joe Clark of Domo.

Data insights are essential for businesses to make data-driven decisions, identify trends and optimize operations. Traditionally, gaining these insights required skilled analysts using specialized tools, making the process time-consuming and difficult to access.

Generative artificial intelligence (AI) is revolutionizing this by letting users interact with their data through natural language queries and receive instant insights and visualizations without needing technical expertise. This democratizes access to data and accelerates analysis.

However, businesses can face challenges when using generative AI for data analysis, including maintaining data quality, addressing privacy concerns, managing bias in models, and integrating AI systems with existing workflows.

Domo is an innovator of cloud-centric data experiences that empower people to make data-driven decisions. Powered by AI and data science, Domo’s user-friendly dashboards and apps make data actionable to drive exponential business impact. Domo connects, transforms, visualizes and automates data through simple integrations and intelligent automation to power your entire data journey.

In this post, we discuss how Domo uses Amazon Bedrock to deliver flexible and powerful AI solutions.

What does Domo use generative AI for?

The Domo Enterprise Data Environment serves a diverse customer base with different data-driven requirements. Domo works with organizations that are focused on deriving actionable insights from their data assets. Domo’s existing solutions enable these organizations to derive valuable insights through data visualization and analysis. The next step is to provide a more intuitive, conversational interface for interacting with data, so that these organizations can generate meaningful visualizations and reports through natural language.

Domo.AI Powered by Amazon Bedrock

Domo.AI simplifies data exploration and analysis by intelligently guiding you through everything from data preparation to prediction to automation. It delivers this through natural language conversations; contextual, personalized insights with narrative and visual responses; and robust security and governance for a guided risk management experience.

Domo’s AI Service Layer is the foundation of the Domo.AI experience. Domo uses the AI Service Layer with Amazon Bedrock to provide flexible and powerful AI solutions to its customers. The AI Service Layer lets Domo switch between different models offered by Amazon Bedrock for individual tasks and track their performance across key metrics such as accuracy, latency, and cost. This allows Domo to optimize model performance through prompt engineering, preprocessing, and postprocessing, and to provide contextual information and examples to the models. Through this integration with Amazon Bedrock, Domo gives its customers the tools they need to use AI across their organizations, from natural language data exploration in AI chat to custom applications and automations built on different AI models.
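The per-task model switching described above can be sketched as a small registry that records accuracy, latency, and cost for each model and picks a model per task. This is a minimal illustration under assumptions, not Domo’s implementation: the `ModelRegistry` class, the task names, and the model names are hypothetical, and "best" is assumed to mean the highest accuracy within latency and cost budgets.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional, Tuple


@dataclass
class ModelStats:
    """Observed metrics for one model on one task (hypothetical schema)."""
    correct: List[float] = field(default_factory=list)
    latency_ms: List[float] = field(default_factory=list)
    cost_usd: List[float] = field(default_factory=list)


class ModelRegistry:
    """Hypothetical per-task model selector, not Domo's actual service layer."""

    def __init__(self) -> None:
        self._stats: Dict[Tuple[str, str], ModelStats] = {}

    def record(self, task: str, model_id: str, correct: bool,
               latency_ms: float, cost_usd: float) -> None:
        """Store the outcome of one evaluated model call."""
        stats = self._stats.setdefault((task, model_id), ModelStats())
        stats.correct.append(1.0 if correct else 0.0)
        stats.latency_ms.append(latency_ms)
        stats.cost_usd.append(cost_usd)

    def best_model(self, task: str, max_latency_ms: float,
                   max_cost_usd: float) -> Optional[str]:
        """Return the most accurate model whose average latency and cost fit
        the budgets; ties go to the cheaper model. None if nothing fits."""
        candidates = []
        for (t, model_id), stats in self._stats.items():
            if t != task:
                continue
            avg_latency = mean(stats.latency_ms)
            avg_cost = mean(stats.cost_usd)
            if avg_latency > max_latency_ms or avg_cost > max_cost_usd:
                continue
            # Tuple ordering: accuracy first, then lower cost (negated).
            candidates.append((mean(stats.correct), -avg_cost, model_id))
        if not candidates:
            return None
        return max(candidates)[2]
```

Keeping the selection policy in one place means a task’s model can change without touching its callers; a real service layer would also persist these metrics and might weigh them differently.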

Amazon Bedrock is a fully managed service that offers a choice of high-performance foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a wide range of capabilities for building generative AI applications with security, privacy, and responsible AI. With Amazon Bedrock, you can quickly try and evaluate the FMs that best suit your use case, customize them privately with your data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and build agents that orchestrate tasks with enterprise systems and data sources. Amazon Bedrock is serverless, so there is no need to manage infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using familiar AWS services.
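Because Amazon Bedrock exposes many FMs through one API, switching models can come down to changing the model ID. The sketch below assumes the boto3 `bedrock-runtime` client and its Converse API; the `ask` helper is hypothetical, and the client is passed in so the function can be exercised without AWS credentials.

```python
from typing import Any, Dict, Optional


def ask(client: Any, model_id: str, question: str,
        system_prompt: Optional[str] = None, max_tokens: int = 512) -> str:
    """Send one user turn to a Bedrock model through the Converse API and
    return the reply text. `client` is assumed to be a boto3
    bedrock-runtime client (or a stub with the same `converse` method)."""
    request: Dict[str, Any] = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": question}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.0},
    }
    if system_prompt:
        request["system"] = [{"text": system_prompt}]
    response = client.converse(**request)
    # The reply is a list of content blocks; join the text blocks together.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)


# Example usage (requires boto3, AWS credentials, and model access):
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   ask(client, "anthropic.claude-3-haiku-20240307-v1:0", "Summarize Q3 sales.")
```

Since the Converse API uses the same request shape for the models that support it, trying another FM is a matter of passing a different `model_id` that is enabled in your account and Region.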

Solution overview

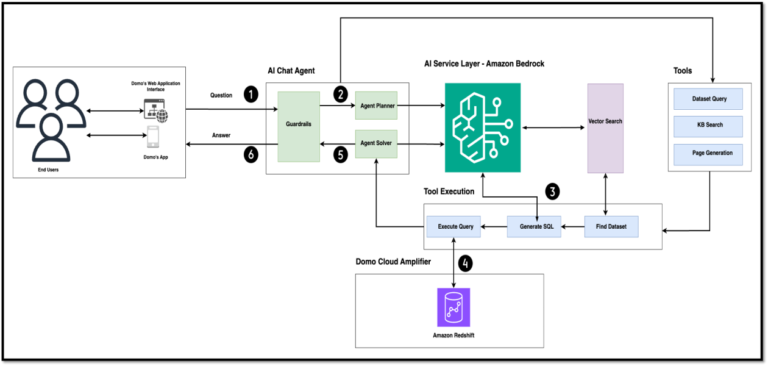

The following diagram shows the solution architecture and data flows.

The workflow includes the following steps:

- End users interact with Domo.AI through the website or mobile app. Requests first go to the AI chat agent, which uses large language models (LLMs) to interpret the user’s input, determine which available tools can resolve the question or request, and craft a final response. Each request also passes through guardrails: mechanisms and strategies that enforce responsible, ethical, and safe use of AI models. Guardrails help ensure that the agent’s responses comply with an organization’s responsible AI policies and do not contain inappropriate or harmful content. Domo implements these safeguards with custom business logic tailored to each customer’s use case and responsible AI policies.

- The agent planner component is responsible for orchestrating the various tasks required to fulfill an end-user request. It calls Amazon Bedrock services via APIs to create an execution plan, which includes selecting the appropriate tools and models to get relevant information or perform custom actions. Tools refer to the different functions or actions that an AI chat agent can use to gather information and perform tasks. Tools provide agents with access to data and capabilities beyond what is available in the underlying LLM.

- The tool execution component invokes the selected tools and integrates their outputs into a final response. This lets the agent go beyond the knowledge contained in the LLM to incorporate up-to-date information or perform domain-specific operations.

- When a tool runs, it uses the user’s input either to find semantically related information with vector search or to query private data from sources like Amazon Redshift through Domo Cloud Amplifier, a native integration with cross-cloud systems that unlocks data products at the speed businesses need. Vector search is a technique for finding semantically related information in unstructured data sources such as knowledge base articles and other documents. By creating vector embeddings of this content, AI chat agents can efficiently search for and retrieve the information most relevant to a user’s query, even when the exact phrase doesn’t appear in the source material.

- The output of the tool runs, such as search and query results, is returned to the AI chat agent. The agent solver component aggregates these results into a final response or, for more complex queries, the agent runs another tool.

- Each component of the solution uses the Domo AI Service Layer, powered by Amazon Bedrock, to provide planning and reasoning capabilities, translate user questions into SQL queries, create embeddings for vector search, and generate natural language answers to user questions based on private customer data.
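The workflow above can be sketched as a loop: check the request against guardrails, let a planner pick a tool, run it, and hand the collected results to a solver, repeating until the planner has nothing left to run. This is a hypothetical, simplified sketch rather than Domo’s code. In Domo’s solution the planner and solver are LLM calls through the AI Service Layer; here `plan` simply matches tool names against the question, `solve` joins the outputs, and the keyword blocklist in `passes_guardrail` is a stand-in for Domo’s custom guardrail logic.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical tool signature: takes the user question, returns a text result.
Tool = Callable[[str], str]

# Stand-in for real responsible AI policies (assumption for illustration).
BLOCKED_TERMS = {"password", "ssn"}


def passes_guardrail(text: str) -> bool:
    """Return False if the text contains any blocked term."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def plan(question: str, tools: Dict[str, Tool], used: List[str]) -> Optional[str]:
    """Pick the next tool to run, or None when no more tools are needed.
    A real planner would ask an LLM; this stand-in matches tool names."""
    for name in tools:
        if name not in used and name in question.lower():
            return name
    return None


def solve(question: str, results: Dict[str, str]) -> str:
    """Aggregate tool outputs into a final answer (an LLM call in practice)."""
    if not results:
        return "I could not find relevant information."
    return " | ".join(f"{name}: {output}" for name, output in results.items())


def run_agent(question: str, tools: Dict[str, Tool], max_steps: int = 3) -> str:
    """Guardrail check, then plan/execute tools, then solve, then recheck."""
    if not passes_guardrail(question):
        return "Request blocked by guardrails."
    results: Dict[str, str] = {}
    for _ in range(max_steps):
        tool_name = plan(question, tools, list(results))
        if tool_name is None:
            break
        results[tool_name] = tools[tool_name](question)
    answer = solve(question, results)
    # Check the generated response as well as the incoming request.
    return answer if passes_guardrail(answer) else "Response blocked by guardrails."
```

The `max_steps` cap is one simple way to keep a multi-tool loop from running indefinitely when the planner keeps finding work.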
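The vector search step can be illustrated with a brute-force cosine-similarity ranking. This sketch assumes the embeddings already exist; in practice they would come from an embedding model (for example, one served through Amazon Bedrock) and be stored in a vector store. The helper names, toy three-dimensional vectors, and document names are made up for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float], index: Dict[str, Sequence[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Rank every stored embedding against the query and return the k best."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```

A linear scan is fine for a handful of documents; production vector stores typically use approximate nearest-neighbor indexes to keep queries fast at scale.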

The following video from Domo.AI provides a detailed overview of the product’s key features and capabilities:

Why Domo Chose Amazon Bedrock

Domo chose Amazon Bedrock because of the following benefits and features:

- Model Selection – Amazon Bedrock gives Domo access to a wide range of models, including best-in-class options from a variety of providers such as Anthropic, AI21 Labs, Cohere, Meta, and Stability AI. This diversity lets Domo thoroughly test its service with different models and choose the best option for each use case. As a result, Domo can use this flexibility in model selection and experimentation to accelerate its development process and deliver value to customers more quickly.

- Security, Compliance and Global Infrastructure – Amazon Bedrock addresses security and compliance concerns that matter to Domo and its customers. With Amazon Bedrock, Domo keeps data within its AWS hosting environment and prevents model providers from accessing or training on customer data. Encryption in transit and at rest, together with access restrictions on model deployment accounts, provides robust data protection. Domo has also implemented multiple guardrails with different combinations of controls to suit different applications and use cases. Amazon Bedrock provides a single API for inference and facilitates secure communication between users and FMs. Finally, Amazon Bedrock’s global infrastructure and compliance capabilities enable Domo to scale and deploy generative AI applications worldwide while complying with data privacy laws and best practices.

- Cost – By using Amazon Bedrock, Domo achieved significant cost savings, reporting a 50% reduction compared to similar models from other providers. Serverless access to high-quality LLMs eliminates the large up-front infrastructure investments typically associated with LLMs. This lets Domo try and test different models without the significant expense of implementing and maintaining LLMs itself, optimizing resource allocation and improving overall operational efficiency.

In the following video, Joe Clark, Software Architect at Domo, talks about how AWS has helped Domo in the area of generative AI.

Get started with Amazon Bedrock

Amazon Bedrock enables teams and individuals to get started with FMs immediately, without having to provision infrastructure or set up and configure ML frameworks.

Before you begin, be sure that your user or role has permissions to create or modify Amazon Bedrock resources. For more information, see Identity-Based Policy Examples for Amazon Bedrock.

To access Amazon Bedrock models, open the Amazon Bedrock console, choose Model access in the navigation pane, review the EULA, and enable the FMs you need for your account.
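After enabling model access, you might also check programmatically which text-generating models Amazon Bedrock offers in a Region. This sketch assumes the boto3 `bedrock` control-plane client and its `list_foundation_models` operation; the `text_models_by_provider` helper is hypothetical, and the client is injected so the function can run against a stub. A model appearing in this list does not by itself mean access has been enabled for your account.

```python
from collections import defaultdict
from typing import Any, Dict, List


def text_models_by_provider(client: Any) -> Dict[str, List[str]]:
    """Group the model IDs of text-output FMs by provider name.
    `client` is assumed to be boto3.client("bedrock") or a compatible stub."""
    response = client.list_foundation_models(byOutputModality="TEXT")
    grouped: Dict[str, List[str]] = defaultdict(list)
    for summary in response["modelSummaries"]:
        grouped[summary["providerName"]].append(summary["modelId"])
    # Sort IDs so the output is stable between calls.
    return {provider: sorted(ids) for provider, ids in grouped.items()}


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   print(text_models_by_provider(boto3.client("bedrock", region_name="us-east-1")))
```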

You can start interacting with FMs in the following ways:

Conclusion

Amazon Bedrock helps Domo power its data insights and visualization capabilities through generative AI. By providing flexibility in FM choice, secure access, and a fully managed experience, Amazon Bedrock has enabled Domo to deliver more value to customers while reducing costs. The service’s security and compliance features have enabled Domo to serve customers in highly regulated industries. Using Amazon Bedrock, Domo has reduced costs by 50% compared to similarly performing models from other providers.

If you are ready to build your own FM innovations with Amazon Bedrock, see Getting Started with Amazon Bedrock. To learn more about other interesting Amazon Bedrock applications, see the Amazon Bedrock section of the AWS Machine Learning blog.

About the Authors

Joe Clark is a Software Architect on the Domo Labs team and the lead architect for Domo’s AI Service Layer, AI Chat, and Model Management. At Domo, he has also led the development of features such as Jupyter Workspaces, Sandboxes, and Code Engine. He has 15 years of professional software development experience and previously worked on IoT and Smart City initiatives.

Aman Tiwari is a General Solutions Architect working with Independent Software Vendors in the Data and Generative AI space at AWS. He helps them design innovative, resilient, and cost-effective solutions using AWS services. He holds a Master’s degree in Telecommunications Networking from Northeastern University. Outside of work, he enjoys playing lawn tennis and reading.

Sindhu Jambhunathan is a Senior Solutions Architect at AWS, specializing in supporting ISV customers in the Data and Generative AI space to build scalable, reliable, secure, and cost-effective solutions on AWS. With over 13 years of industry experience, she joined AWS in May 2021 after serving as a Senior Software Engineer at Microsoft. Sindhu’s diverse background includes engineering positions at Qualcomm and Rockwell Collins, and she holds an MS in Computer Engineering from the University of Florida. Her technical expertise is balanced with her passion for culinary exploration, travel, and outdoor activities.

Mohammed Tahsin is an AI/ML Specialist Solutions Architect at Amazon Web Services. He enjoys staying up to date with the latest technologies in AI/ML and helping customers deploy custom solutions on AWS. Outside of work, he loves gaming, digital art, and cooking.