This post is co-authored with Javier Beltrán, Ornela Xhelili and Prasidh Chhabri from AETION.

It is important for healthcare decision-makers to gain a comprehensive understanding of patient journeys and health outcomes over time. Scientists, epidemiologists, and biostatisticians implement a vast range of queries to capture complex, clinically relevant patient variables from real-world data. These variables often involve complex sequences of events, combinations of occurrences and non-occurrences, and detailed numeric calculations or categorizations that accurately reflect the diverse nature of patients' experiences and medical histories. Expressing these variables as natural language queries allows users to express scientific intent and explore the full complexity of the patient timeline.

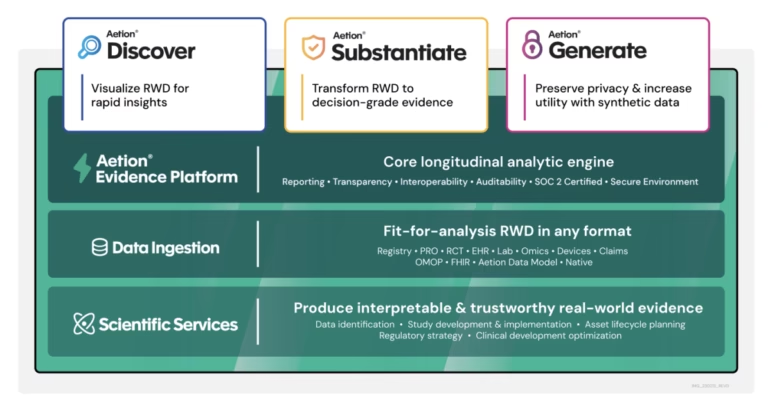

AETION is a leading provider of decision-grade real-world evidence software for biopharma, payers, and regulatory agencies. The company provides comprehensive solutions to its healthcare and life sciences customers to rapidly and transparently transform real-world data into real-world evidence.

At the core of the AETION Evidence Platform (AEP) are Measures: logical building blocks used to flexibly capture complex patient variables, enabling scientists to customize their analyses to address the nuances and challenges presented by their research questions. AEP users can use Measures to build patient cohorts and analyze their outcomes and characteristics.

Users asking scientific questions aim to translate scientific intent, such as “I want to find patients diagnosed with diabetes and a subsequent metformin fill,” into algorithms that capture these variables in real-world data. To facilitate this translation, AETION developed the Measures Assistant, which turns a user's natural language expression of scientific intent into Measures.

In this post, we show how AETION uses Amazon Bedrock to streamline the analytical process toward producing decision-grade real-world evidence, enabling users with and without data science expertise to interact with complex real-world datasets.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies and Amazon through a single API. Its broad selection of FMs lets you choose the model best suited to your use case.

AETION's technology

AETION is a healthcare software and services company that uses the science of causal inference to generate real-world evidence on the safety, efficacy, and value of drugs and clinical interventions. AETION partners with the majority of the top 20 biopharma companies, leading payers, and regulatory agencies.

AETION brings deep scientific expertise and technology to life sciences companies, regulatory agencies (including FDA and EMA), payers, and health technology assessment (HTA) customers in the US, Canada, Europe, and Japan, with analytics that help them:

- Optimize clinical trials by identifying target populations, creating external control arms, and contextualizing settings and populations underrepresented in controlled settings

- Expand industry access through label changes, pricing, coverage, and formulary decisions

- Conduct safety and efficacy studies of drugs, treatments, and diagnostics

AETION's applications, including Discover and Substantiate, are powered by AEP, a core longitudinal analytics engine that can apply rigorous causal inference and statistical methods to hundreds of millions of patient journeys.

AETION's generative AI capabilities, known as AetionAI, are embedded throughout AEP and its applications. The Measures Assistant is an AetionAI feature in Substantiate.

The following diagram shows the organization of AETION services.

Measures Assistant

Users build analyses in AETION's Substantiate application to transform real-world data into decision-grade real-world evidence. The first step is to capture patient variables from real-world data. As shown in the following screenshot, Substantiate offers a wide range of Measures. Measures can often be chained together to capture complex variables.

Suppose a user is assessing the cost-effectiveness of a therapy to help negotiate drug reimbursement with payers. The first step in this analysis is to filter out any negative cost values that might appear in the claims data. The user can ask the Measures Assistant how to implement this, as shown in the following screenshot.
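To make the filtering step concrete, here is a minimal Python sketch of what such a Measure computes, assuming a simplified claim record. The `Claim` class, its field names, and both functions are hypothetical illustrations, not AEP's schema or API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Claim:
    # Hypothetical claim record; these field names are illustrative, not AEP's schema.
    patient_id: str
    service_date: date
    cost: float


def drop_negative_costs(claims: list[Claim]) -> list[Claim]:
    """Keep only claims with a cost of zero or more."""
    return [claim for claim in claims if claim.cost >= 0]


def total_cost_by_patient(claims: list[Claim]) -> dict[str, float]:
    """Sum per-patient costs after the negative values have been filtered out."""
    totals: dict[str, float] = {}
    for claim in drop_negative_costs(claims):
        totals[claim.patient_id] = totals.get(claim.patient_id, 0.0) + claim.cost
    return totals


claims = [
    Claim("p1", date(2023, 1, 5), 120.0),
    Claim("p1", date(2023, 2, 1), -120.0),  # excluded by the filter
    Claim("p2", date(2023, 3, 3), 50.0),
]
print(total_cost_by_patient(claims))  # {'p1': 120.0, 'p2': 50.0}
```

Filtering before aggregating keeps the cleaning rule in one place, so any downstream cost calculation built on top of it inherits the same exclusion.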

In another scenario, users might want to define the outcome of their analysis as the change in hemoglobin over successive lab tests following the start of treatment. The user asks the Measures Assistant a question expressed in natural language and receives instructions on how to implement this.
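The hemoglobin outcome can be sketched in plain Python as follows. This is an illustration under stated assumptions, not AETION's implementation: lab results are assumed to be `(date, value in g/dL)` pairs, tests on the treatment start date count as "after start", and the 2.0 g/dL drop threshold in `has_hemoglobin_drop` is a hypothetical parameter chosen only for the example.

```python
from datetime import date


def hemoglobin_changes(labs: list[tuple[date, float]],
                       treatment_start: date) -> list[tuple[date, float]]:
    """Return the change in hemoglobin (g/dL) between each pair of successive
    lab tests taken on or after the treatment start date."""
    after_start = sorted((d, value) for d, value in labs if d >= treatment_start)
    return [
        (later_date, round(later - earlier, 2))
        for (_, earlier), (later_date, later) in zip(after_start, after_start[1:])
    ]


def has_hemoglobin_drop(labs: list[tuple[date, float]], treatment_start: date,
                        threshold_g_dl: float = 2.0) -> bool:
    """Hypothetical outcome: any successive-test decrease of at least threshold_g_dl."""
    return any(delta <= -threshold_g_dl
               for _, delta in hemoglobin_changes(labs, treatment_start))


labs = [
    (date(2022, 12, 1), 14.0),  # before treatment start, ignored
    (date(2023, 1, 1), 13.0),
    (date(2023, 2, 1), 12.5),
    (date(2023, 3, 1), 10.0),
]
start = date(2023, 1, 1)
print(hemoglobin_changes(labs, start))
print(has_hemoglobin_drop(labs, start))  # True
```

Sorting the filtered tests by date before pairing them ensures that "successive" means consecutive in time, regardless of the order in which the records arrive.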

Solution overview

Patient datasets are ingested into AEP and converted into a longitudinal (timeline) format. AEP references this data to generate cohorts and run analyses. Measures are the variables that determine cohort entry, inclusion and exclusion criteria, and study characteristics.
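The following minimal sketch shows the idea of a longitudinal timeline and of chaining Measures, using the diabetes-then-metformin example from earlier. AEP's actual data model is not described in this post, so the `Event` record, the string codes, and the choice of the dispensing date as the cohort entry date are all hypothetical assumptions.

```python
from collections import defaultdict
from datetime import date
from typing import NamedTuple, Optional


class Event(NamedTuple):
    # Hypothetical event record; AEP's actual longitudinal schema is not shown in this post.
    patient_id: str
    event_date: date
    kind: str  # for example "diagnosis" or "dispensing"
    code: str  # simplified stand-in for a clinical code


def to_timelines(events: list[Event]) -> dict[str, list[Event]]:
    """Group flat event records into one date-ordered timeline per patient."""
    timelines: dict[str, list[Event]] = defaultdict(list)
    for event in events:
        timelines[event.patient_id].append(event)
    for timeline in timelines.values():
        timeline.sort(key=lambda e: e.event_date)
    return dict(timelines)


def first_date(timeline: list[Event], kind: str, code: str,
               on_or_after: Optional[date] = None) -> Optional[date]:
    """A simple Measure: date of the first matching event, optionally on or after a date."""
    for event in timeline:
        if event.kind == kind and event.code == code:
            if on_or_after is None or event.event_date >= on_or_after:
                return event.event_date
    return None


def cohort_entry_date(timeline: list[Event]) -> Optional[date]:
    """Two chained Measures: a diabetes diagnosis, then a metformin dispensing on or
    after that diagnosis. Using the dispensing date as entry date is an assumption."""
    diagnosis = first_date(timeline, "diagnosis", "diabetes")
    if diagnosis is None:
        return None
    return first_date(timeline, "dispensing", "metformin", on_or_after=diagnosis)


events = [
    Event("p1", date(2023, 3, 1), "dispensing", "metformin"),
    Event("p1", date(2023, 1, 10), "diagnosis", "diabetes"),
    Event("p2", date(2023, 1, 1), "dispensing", "metformin"),  # before diagnosis
    Event("p2", date(2023, 2, 1), "diagnosis", "diabetes"),
]
entries = {pid: cohort_entry_date(tl) for pid, tl in to_timelines(events).items()}
print(entries)
```

Here patient `p1` enters the cohort on the metformin fill date, while `p2` does not, because the only fill precedes the diagnosis.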

The following diagram illustrates the solution architecture.

The Measures Assistant is a microservice deployed in a Kubernetes on AWS environment and accessed through a REST API. Data transmitted to the service is encrypted using Transport Layer Security (TLS) 1.2. When a user asks a question through the assistant UI, Substantiate initiates a request containing the question and, if available, the history of previous messages. The Measures Assistant incorporates the question into a prompt template and calls the Amazon Bedrock API to invoke Anthropic's Claude 3 Haiku. User-provided prompts and requests sent to the Amazon Bedrock API are encrypted using TLS 1.2.
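The Bedrock call in this flow can be sketched with the AWS SDK for Python (Boto3). This is a minimal sketch, not AETION's code: it assumes the Anthropic Messages request format that Amazon Bedrock accepts for Claude 3 models and the model ID `anthropic.claude-3-haiku-20240307-v1:0`. The function names and the shape of the `history` records are hypothetical. The client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
import json
from typing import Iterable, Mapping

# Claude 3 Haiku model ID on Amazon Bedrock (check availability in your Region).
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_request_body(system_prompt: str, question: str,
                       history: Iterable[Mapping[str, str]],
                       max_tokens: int = 1024) -> dict:
    """Build an Anthropic Messages API body: prior turns first, then the new question.

    Each history item is a hypothetical {"role": "user" | "assistant", "content": str}
    record; the Messages API expects turns to alternate and to start with "user".
    """
    messages = [
        {"role": turn["role"], "content": [{"type": "text", "text": turn["content"]}]}
        for turn in history
    ]
    messages.append({"role": "user", "content": [{"type": "text", "text": question}]})
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": messages,
    }


def ask_measures_assistant(client, system_prompt: str, question: str,
                           history: Iterable[Mapping[str, str]] = ()) -> str:
    """Invoke Claude 3 Haiku through a bedrock-runtime client and return the answer text."""
    body = build_request_body(system_prompt, question, history)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps(body),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # The response "content" is a list of blocks; keep only the text blocks.
    return "".join(block["text"] for block in payload["content"] if block["type"] == "text")
```

In a real deployment, `client` would be created with `boto3.client("bedrock-runtime")`. Boto3 calls the Bedrock Runtime HTTPS endpoint, so the request is encrypted in transit.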

AETION chose Amazon Bedrock to work with large language models (LLMs) because of its broad selection of models from multiple providers, its security posture, scalability, and ease of use. AETION found Anthropic's Claude 3 Haiku to be more efficient in runtime and cost than the available alternatives.

The Measures Assistant maintains a local knowledge base about AEP, curated by AETION's scientific experts, and incorporates this information into its responses as guardrails. These guardrails make sure the service returns valid instructions to the user and compensate for logical reasoning errors that the core model might exhibit.

The Measures Assistant prompt template contains the following information:

- A general definition of the task the LLM is performing.

- Extracts of AEP documentation, describing each Measure type covered, its input and output types, and how to use it.

- An in-context learning technique that includes semantically relevant solved questions and answers in the prompt.

- Rules that condition the LLM to behave in a specific way, for example, how to respond to unrelated questions, how to keep sensitive data secure, and how to limit its creativity so that it doesn't produce invalid AEP settings.

To streamline the process, the Measures Assistant uses a template composed of two parts:

- Static – Fixed instructions included with every user question. These instructions cover a broad range of well-defined guidance for the Measures Assistant.

- Dynamic – Questions and answers dynamically selected from the local knowledge base based on their semantic proximity to the user's question. These examples improve the quality of the generated answers by incorporating similar, previously asked and answered questions into the prompt. This technique models a small, optimized, in-process knowledge base for a Retrieval Augmented Generation (RAG) pattern.
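Combining the two parts can be sketched as simple string assembly. This is a hypothetical illustration: the actual template text is not published, so `STATIC_PART` is placeholder wording that stands in for the task definition, documentation extracts, and rules listed earlier, and the `<example>` tags are an assumed delimiter choice.

```python
from typing import Sequence, Tuple

# Hypothetical static part standing in for the task definition, AEP documentation
# extracts, and behavioral rules; the real template text is not published.
STATIC_PART = "\n\n".join([
    "Task: explain how to implement the user's scientific intent with AEP Measures.",
    "Documentation: <extracts describing each Measure type, its inputs, outputs, and usage>",
    "Rules: decline unrelated questions, never echo sensitive data, and only propose "
    "Measure settings that the documentation supports.",
])


def build_system_prompt(static_part: str, examples: Sequence[Tuple[str, str]]) -> str:
    """Append retrieved (question, answer) pairs, the dynamic part, to the static part."""
    if not examples:
        return static_part
    shots = "\n\n".join(
        f"<example>\nQuestion: {question}\nAnswer: {answer}\n</example>"
        for question, answer in examples
    )
    return f"{static_part}\n\nSolved examples similar to the user's question:\n\n{shots}"


prompt = build_system_prompt(
    STATIC_PART,
    [("How do I exclude negative costs?", "<expert-approved answer>")],
)
print(prompt)
```

Keeping the static part as a constant and appending only the retrieved examples means that the per-request work is limited to retrieval and concatenation.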

Mixedbread's mxbai-embed-large-v1 Sentence Transformer was fine-tuned to generate sentence embeddings for the question-and-answer local knowledge base and for users' questions. Sentence similarity is calculated as the cosine similarity between the vector embeddings.
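The retrieval step can be sketched with cosine similarity in plain Python. The embeddings below are toy 2-dimensional vectors chosen for readability; in practice they would come from the fine-tuned embedding model (for example, through the sentence-transformers `SentenceTransformer.encode` method), and the knowledge base entries shown are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Each knowledge-base entry: (question, answer, precomputed embedding of the question).
Entry = Tuple[str, str, Sequence[float]]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """cos(a, b) = (a · b) / (|a| |b|); returns 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_examples(query_embedding: Sequence[float],
                   knowledge_base: Sequence[Entry], k: int = 3):
    """Return the k (question, answer) pairs whose embeddings are closest to the query."""
    ranked = sorted(
        knowledge_base,
        key=lambda entry: cosine_similarity(query_embedding, entry[2]),
        reverse=True,
    )
    return [(question, answer) for question, answer, _ in ranked[:k]]


# Toy 2-dimensional vectors; real mxbai-embed-large-v1 embeddings have many more dimensions.
knowledge_base = [
    ("How do I drop negative costs?", "answer A", [1.0, 0.0]),
    ("How do I measure hemoglobin change?", "answer B", [0.0, 1.0]),
    ("How do I sum costs after a diagnosis?", "answer C", [0.7, 0.7]),
]
print(top_k_examples([1.0, 0.1], knowledge_base, k=2))
```

For a small in-process knowledge base, a linear scan like this is adequate; a larger pool would typically precompute normalized embeddings or use a vector index.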

Creating and maintaining the question-and-answer pool involves a human in the loop. Subject matter experts continuously test the Measures Assistant, and the resulting question-and-answer pairs are used to refine it and optimize the user experience.

Results

Implementing AetionAI features enables users to describe their scientific intent in natural language queries and sentences and turn it into algorithms that capture these variables in real-world data. Users can now turn questions expressed in natural language into Measures in seconds rather than days, without the need for support staff or specialized training.

Conclusion

In this post, we discussed how AETION uses AWS services to streamline the user's path from defining scientific intent to running a study and obtaining results. The Measures Assistant enables scientists to conduct complex studies and iterate on study designs, guided by quick natural language queries.

AETION continues to refine the Measures Assistant's knowledge base and to scale innovative generative AI capabilities across its product suite, helping to improve the user experience and ultimately accelerate the process of turning real-world data into real-world evidence.

With Amazon Bedrock, the future of innovation is at your fingertips. Explore the Generative AI Application Builder on AWS to learn more about building generative AI capabilities that unlock new insights, enable transformative solutions, and shape the future of healthcare today.

About the Authors

Javier Beltrán is a Senior Machine Learning Engineer at AETION. His career has focused on natural language processing, and he has experience applying machine learning solutions to a variety of domains, from healthcare to social media.

Ornela Xhelili is a Staff Machine Learning Architect at AETION. Ornela specializes in natural language processing, predictive analytics, and MLOps, and holds a Master of Science in Statistics. Over the past eight years, Ornela has built AI/ML products for technology startups across a range of domains, including healthcare, finance, analytics, and e-commerce.

Prasidh Chhabri is a Product Manager at AETION, leading the AETION Evidence Platform, core analytics, and AI/ML capabilities. He has extensive experience building quantitative and statistical methods to solve problems in human health.

Mikhail Weinstein is a Solutions Architect with Amazon Web Services. Mikhail works with healthcare and life sciences customers and specializes in data analytics services. Mikhail has more than 20 years of industry experience covering a wide range of technologies and sectors.
