Amazon SageMaker is a cloud-based machine learning (ML) platform in the AWS ecosystem that provides developers with a seamless and convenient way to build, train, and deploy ML models. Widely used by data scientists and ML engineers across industries, this robust tool provides high availability and uninterrupted access to users. When using SageMaker, your environment resides within a SageMaker domain. A SageMaker domain includes important components such as Amazon Elastic File System (Amazon EFS) for storage, user profiles, and various security settings. This comprehensive setup allows users to store, share, and access notebooks, Python files, and other important artifacts, enabling collaboration.

In 2023, SageMaker announced the release of the new SageMaker Studio, which offers two new types of applications: JupyterLab and Code Editor. The old SageMaker Studio was renamed SageMaker Studio Classic. Unlike SageMaker Studio Classic, where applications share a single storage volume, each JupyterLab and Code Editor instance has its own Amazon Elastic Block Store (Amazon EBS) volume. For more information about this architecture, see New – Code Editor, based on Code-OSS VS Code Open Source now available in Amazon SageMaker Studio. Another new feature is the ability to bring your own EFS instance, which lets you attach and detach a custom EFS instance.

A new SageMaker Studio-specific SageMaker domain consists of the following entities:

- User profile

- Applications such as JupyterLab, Code Editor, RStudio, Canvas, and MLflow

- Various security, application, policy, and Amazon Virtual Private Cloud (Amazon VPC) configurations

As a precautionary measure, some customers may want SageMaker to keep operating even if the service has an outage in their Region. This solution uses the built-in cross-Region replication of Amazon EFS as a disaster recovery mechanism, providing continued access to your SageMaker domain data across multiple Regions. Replicating your data and resources across Regions supports business continuity by protecting against Regional outages, natural disasters, and unexpected technical failures. This setup is especially important for mission-critical and time-sensitive workloads, because data scientists and ML engineers can continue working without interruption.

The solution described in this post focuses on the new SageMaker Studio experience, specifically private JupyterLab and Code Editor spaces. Although the code base does not cover shared spaces, the solution can be extended to them using the same concepts. This post walks you step by step through migrating and safeguarding a new SageMaker domain in Amazon SageMaker Studio from one active AWS Region to another AWS Region, including all associated user profiles and files. By combining several AWS services, you can implement this functionality and overcome the current limitations within SageMaker.

Solution overview

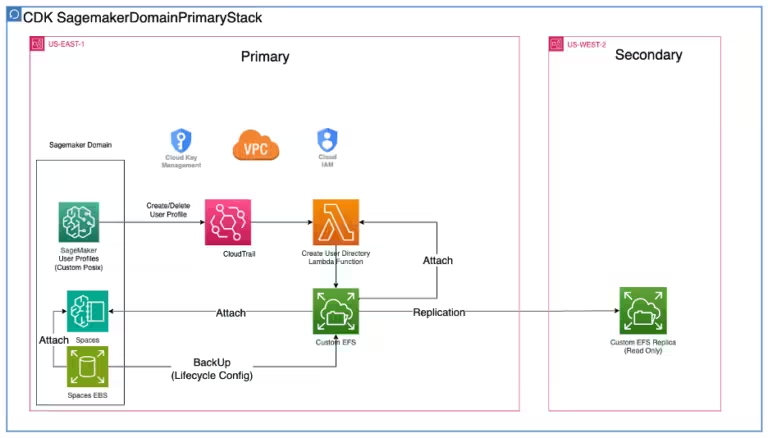

In active/passive mode, your SageMaker domain infrastructure is only provisioned in your primary AWS Region. Data backup is done in near real-time using Amazon EFS replication. Figure 1 shows this architecture.

Figure 1: Active/passive mode, with data backed up through Amazon EFS replication

If the primary Region goes down, a new domain is launched in the secondary Region, and an AWS Step Functions workflow runs to restore the data, as shown in Figure 2.

Figure 2: Active/passive mode, with recovery in the secondary Region

In active/active mode, shown in Figure 3, the SageMaker domain infrastructure is provisioned in two AWS Regions. Data backup is done in near real-time using Amazon EFS replication. Data synchronization is handled by Step Functions workflows, which can be invoked on demand, on a schedule, or by an event.

Figure 3: Active/active mode, with domains provisioned in two AWS Regions

Complete code samples can be found in the GitHub repository.

Building on the capabilities of the upgraded SageMaker domain, we developed a fast and robust cross-Region disaster recovery solution that uses Amazon EFS to back up and restore user data stored in SageMaker Studio applications. In addition, domain user profiles and their respective custom POSIX user settings are managed by YAML files in the AWS Cloud Development Kit (AWS CDK) code base, which ensures that the domain entities in the secondary AWS Region are identical to those in the primary AWS Region. Creating users in the AWS Management Console is not covered here, because user-level custom EFS instances can only be configured through programmatic API calls.
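As a rough illustration of managing profiles as data, the sketch below turns per-user entries into the `UserSettings` structure accepted by the SageMaker `CreateUserProfile` API. The entry fields (`name`, `uid`, `gid`), the per-user `FileSystemPath`, and both helper functions are hypothetical. The repository's real YAML schema is not shown in this post, so the parsed YAML is represented here as a plain Python list.

```python
from typing import Any

# Hypothetical result of parsing a user-profile YAML file.
# The repository's actual schema may differ.
USERS: list[dict[str, Any]] = [
    {"name": "alice", "uid": 10001, "gid": 1001},
    {"name": "bob", "uid": 10002, "gid": 1001},
]


def build_user_settings(user: dict[str, Any], file_system_id: str) -> dict[str, Any]:
    """Build a UserSettings payload for the SageMaker CreateUserProfile API."""
    return {
        # Fixed POSIX IDs, so the same user maps to the same IDs in both Regions.
        "CustomPosixUserConfig": {"Uid": user["uid"], "Gid": user["gid"]},
        "CustomFileSystemConfigs": [
            {
                "EFSFileSystemConfig": {
                    "FileSystemId": file_system_id,
                    # Assumption: one directory per user on the custom EFS instance.
                    "FileSystemPath": f"/{user['name']}",
                }
            }
        ],
    }


def check_unique_uids(users: list[dict[str, Any]]) -> None:
    """Raise ValueError if two profiles share the same POSIX user ID."""
    seen: dict[int, str] = {}
    for user in users:
        uid = user["uid"]
        if uid in seen:
            raise ValueError(f"UID {uid} is used by both {seen[uid]} and {user['name']}")
        seen[uid] = user["name"]
```

Keeping the IDs in version-controlled configuration, rather than letting each domain assign them, is one plausible way to keep file ownership on the replicated file system consistent between Regions.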

Backup

Backups are performed within the primary AWS Region. There are two types of sources: EBS spaces and custom EFS instances.

For EBS spaces, a lifecycle configuration is attached to JupyterLab or Code Editor to back up files. Each time a user opens the application, the lifecycle configuration takes a snapshot of the EBS space and stores it in the custom EFS instance using an rsync command.
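The backup step can be pictured with the following minimal sketch. Lifecycle configurations are normally shell scripts, so this Python version only illustrates the rsync invocation and is not the script from the repository. The function names, the example paths, and the choice of `--delete` are assumptions.

```python
import subprocess
from pathlib import Path


def build_backup_command(source: Path, backup_root: Path, space_name: str) -> list[str]:
    """Return an rsync command that mirrors a space's home directory into EFS."""
    destination = backup_root / space_name
    # -a keeps permissions, ownership, and timestamps.
    # --delete removes files from the backup that no longer exist in the source
    # (an assumption; drop it to keep deleted files in the backup).
    # The trailing slash on the source copies its contents, not the directory itself.
    return ["rsync", "-a", "--delete", f"{source}/", f"{destination}/"]


def run_backup(source: Path, backup_root: Path, space_name: str) -> None:
    """Create the destination directory and run the rsync backup."""
    (backup_root / space_name).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_backup_command(source, backup_root, space_name), check=True)
```

For example, `build_backup_command(Path("/home/sagemaker-user"), Path("/mnt/custom-file-systems/efs/backup"), "my-space")` builds the command for a hypothetical space named `my-space`. The mount path is a placeholder for wherever the custom EFS instance is mounted in the space.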

Custom EFS instances are automatically replicated to a read-only replica in the secondary AWS Region.
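The solution in this post provisions its resources with the AWS CDK, so the following is only an illustrative boto3 equivalent of turning on cross-Region replication for a file system. `create_replication_configuration` is the Amazon EFS API operation. The helper names and the lazy import are choices made for this sketch.

```python
from typing import Any


def replication_request(source_file_system_id: str, destination_region: str) -> dict[str, Any]:
    """Build the arguments for the EFS CreateReplicationConfiguration call."""
    return {
        "SourceFileSystemId": source_file_system_id,
        # With only a Region given, EFS creates the read-only destination file system.
        "Destinations": [{"Region": destination_region}],
    }


def create_replication(
    source_file_system_id: str, primary_region: str, secondary_region: str
) -> dict[str, Any]:
    """Start replication from the primary Region to the secondary Region."""
    import boto3  # imported lazily so the request builder works without boto3

    efs = boto3.client("efs", region_name=primary_region)
    return efs.create_replication_configuration(
        **replication_request(source_file_system_id, secondary_region)
    )
```

The call is made against the Region that holds the source file system, which is why the client is created in the primary Region.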

Recovery

For recovery in the secondary AWS Region, a SageMaker domain with the same user profiles and spaces is deployed, and an empty custom EFS instance is created and attached to it. Next, an Amazon Elastic Container Service (Amazon ECS) task copies all the backup files to the empty custom EFS instance. As the final step, the lifecycle configuration script runs and restores the Amazon EBS snapshot before the SageMaker space launches.
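To make the copy step concrete, here is a minimal sketch of a Step Functions definition, written in Amazon States Language, that runs one ECS task and waits for it to finish. The state names, the Fargate launch type, and the function itself are assumptions. The workflow in the repository may contain more states.

```python
import json
from typing import Any


def recovery_workflow_definition(
    cluster_arn: str, task_definition_arn: str, subnet_ids: list[str]
) -> dict[str, Any]:
    """Return a state machine definition that runs the backup-copy ECS task."""
    return {
        "Comment": "Copy replicated backup files to the empty custom EFS instance",
        "StartAt": "CopyBackupToCustomEfs",
        "States": {
            "CopyBackupToCustomEfs": {
                "Type": "Task",
                # The .sync suffix makes the workflow wait until the task stops.
                "Resource": "arn:aws:states:::ecs:runTask.sync",
                "Parameters": {
                    "LaunchType": "FARGATE",  # assumption for this sketch
                    "Cluster": cluster_arn,
                    "TaskDefinition": task_definition_arn,
                    "NetworkConfiguration": {
                        "AwsvpcConfiguration": {"Subnets": subnet_ids}
                    },
                },
                "Next": "RecoveryComplete",
            },
            "RecoveryComplete": {"Type": "Succeed"},
        },
    }


def to_json(definition: dict[str, Any]) -> str:
    """Serialize the definition for use when creating a state machine."""
    return json.dumps(definition, indent=2)
```

Running the copy as a synchronous task means the workflow succeeds only after the files are in place, so a space launched afterward can restore from them.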

Prerequisites

Complete the following prerequisite steps:

- Clone the GitHub repository to your local machine.

- Change to your project’s working directory and set up a Python virtual environment.

- Install the required dependencies.

- Bootstrap your AWS account and set up an AWS CDK environment in both Regions.

- Synthesize the AWS CloudFormation template with the AWS CDK CLI (`cdk synth`).

- Configure the required arguments in the constants.py file:

  - Set the primary Region in which to deploy your solution.

  - Set the secondary Region in which to recover your primary domain.

  - Replace the account ID variable with your AWS account ID.
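The three settings in constants.py could be pictured as follows. This is a hypothetical sketch, not the file from the repository: the class, field names, and example values are invented for illustration, and the validation is an optional addition.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentConstants:
    """Hypothetical stand-in for the values configured in constants.py."""

    primary_region: str    # Region where the solution is deployed
    secondary_region: str  # Region where the primary domain is recovered
    account_id: str        # your AWS account ID

    def validate(self) -> None:
        """Catch common mistakes before running any AWS CDK command."""
        if self.primary_region == self.secondary_region:
            raise ValueError("Primary and secondary Regions must differ")
        # AWS account IDs are 12-digit numbers; keep them as strings so
        # leading zeros are preserved.
        if not re.fullmatch(r"\d{12}", self.account_id):
            raise ValueError("Account ID must be a 12-digit string")


# Example values only; replace them with your own.
EXAMPLE = DeploymentConstants(
    primary_region="us-east-1",
    secondary_region="us-west-2",
    account_id="111122223333",
)
```

Calling `EXAMPLE.validate()` before deployment fails fast on a mistyped account ID or on identical Regions, which would defeat the purpose of cross-Region recovery.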

Deploy the solution

To deploy the solution, follow these steps:

- Deploy your primary SageMaker domain.

- Deploy a secondary SageMaker domain.

- Deploy a disaster recovery Step Functions workflow.

- Start the application with the custom EFS instance attached and add files to the application’s EBS volume and the custom EFS instance.

Test the solution

To test your solution, follow these steps:

- Add test files using Code Editor or JupyterLab in your primary Region.

- Stop and restart the application.

This triggers the lifecycle configuration script, which takes a snapshot of the application’s Amazon EBS volume.

- Run the disaster recovery Step Functions workflow in the Step Functions console in the secondary Region.

The following diagram shows the steps in the workflow.

- Launch SageMaker Studio for the same user in the SageMaker console in the secondary Region.

The files you added in the primary Region should now be restored in Code Editor or JupyterLab.
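One optional way to check the result is to compare file checksums from the two Regions. The helpers below are a hypothetical sketch and not part of the solution: run `tree_digest` on the test directory in the primary Region, save the result, and compare it with the same call in the secondary Region.

```python
import hashlib
from pathlib import Path


def tree_digest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        str(path.relative_to(root)): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def missing_or_changed(expected: dict[str, str], actual: dict[str, str]) -> list[str]:
    """List files that are absent from, or differ in, the restored copy."""
    return sorted(
        path for path, digest in expected.items() if actual.get(path) != digest
    )
```

An empty list from `missing_or_changed` means every file recorded in the primary Region was restored with identical contents.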

Clean up

Please clean up the resources you created as part of this post to avoid ongoing charges.

- Stop all Code Editor and JupyterLab apps.

- Delete all AWS CDK stacks.

Conclusion

SageMaker provides a robust and highly available ML platform that enables data scientists and ML engineers to efficiently build, train, and deploy models. For critical use cases, a comprehensive disaster recovery strategy makes your SageMaker domain more resilient and keeps operations running even during a Regional outage. This post provided a detailed solution for migrating and safeguarding your SageMaker domain, including user profiles and files, from one active AWS Region to another passive or active AWS Region. The solution combines AWS services such as Amazon EFS, Step Functions, and the AWS CDK to overcome current limitations within SageMaker Studio and provide continuous access to your data and resources. Whether you choose an active/passive or active/active architecture, it gives you a resilient backup and recovery mechanism that increases protection against natural disasters, technical failures, and Regional outages. With this guide, you can maintain business continuity and access to your SageMaker domain even when the unexpected occurs, and protect your mission-critical and time-sensitive workloads.

For more information about disaster recovery on AWS, see:

About the authors

Feng Jingjiao is a Machine Learning Engineer with AWS Professional Services. He focuses on designing and implementing large-scale generative AI and traditional ML pipeline solutions. He specializes in FMOps, LLMOps, and distributed training.

Nick Bisso is a Machine Learning Engineer with AWS Professional Services. He uses data science and engineering to solve complex organizational and technical challenges, and he builds and deploys AI/ML models on the AWS Cloud. His passions include travel and experiencing diverse cultures.

Natasha Chill is a Cloud Consultant at the Generative AI Innovation Center, specializing in machine learning. With a strong background in ML, she now focuses on developing generative AI proof-of-concept solutions and driving innovation and applied research within GenAIIC.

Katherine Fenn is a Cloud Consultant on the Data and ML team at AWS Professional Services. She has extensive experience building full-stack applications for AI/ML use cases and LLM-driven solutions.
