Fine-tuning a pre-trained large language model (LLM) allows users to customize the model to perform better on domain-specific tasks or align more closely with human preferences. Fine-tuning is a continuous process: to keep the fine-tuned model accurate and effective in changing environments, you need to adapt it to shifts in the data distribution (concept drift) and prevent performance degradation over time. Continuous fine-tuning also enables models to integrate human feedback, address errors, and adapt to real-world applications. You can use supervised fine-tuning (SFT) and instruction tuning to train the LLM to perform better on specific tasks using human-annotated datasets and instructions. When you have user feedback on the model responses, you can also use reinforcement learning from human feedback (RLHF) to guide the LLM’s responses by rewarding the outputs that align with human preferences.

Precise and responsible outputs from fine-tuned LLMs require significant effort from subject matter experts (SMEs). Manually annotating extensive training data for fine-tuning and collecting user feedback to align LLM responses with human preferences are both resource-heavy and time-intensive. In addition, the continuous fine-tuning process requires orchestrating multiple steps (data generation, LLM training, feedback collection, and preference alignment) with scalability, resiliency, and resource efficiency. To address these challenges, we present a continuous self-instruct fine-tuning framework that streamlines the LLM fine-tuning process: training data generation and annotation, model training and evaluation, human feedback collection, and alignment with human preferences. This framework is designed as a compound AI system to drive the fine-tuning workflow for performance improvement, versatility, and reusability.

In this post, we introduce the continuous self-instruct fine-tuning framework and its pipeline, and present how to drive the continuous fine-tuning process for a question-answer task as a compound AI system. We use DSPy (Declarative Self-improving Python) to demonstrate the workflow of Retrieval Augmented Generation (RAG) optimization, LLM fine-tuning and evaluation, and human preference alignment for performance improvement.

Overview of the continuous self-instruct fine-tuning framework

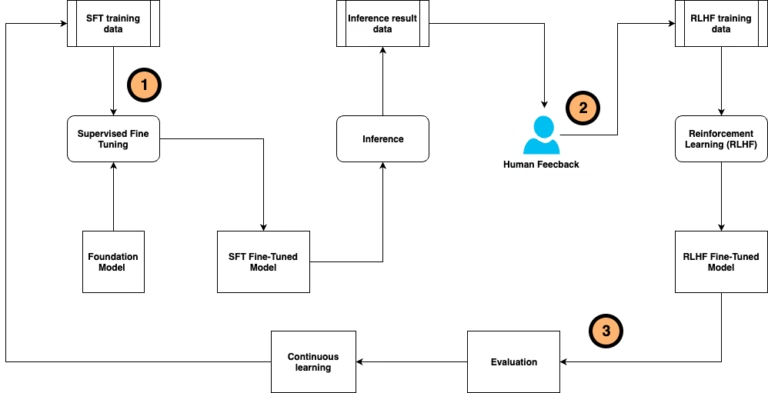

The continuous self-instruct fine-tuning framework drives a workflow to customize the foundation model (FM) using human-labeled training samples and human feedback after model inference. This workflow runs on a continuous basis to be adaptive to a changing environment. The following diagram illustrates the workflow.

The workflow consists of the following steps:

- Self-instruct supervised fine-tuning – First, we use a human-labeled training dataset to adapt the FM to tasks in a specific domain. Instruction tuning is a popular approach in domain-specific LLM fine-tuning, which trains the FM to follow instructions for a specific task rather than simply predicting the next tokens. To address the limited availability of human effort for data labeling, annotation, and validation, we designed a self-instruct fine-tuning method in which the LLM synthetically generates training labels from a small volume of high-quality human-annotated samples. This process scales up the training dataset used for fine-tuning the FM into a custom LLM.

- Human preference alignment – After the model is deployed in the production environment, the process moves into the human-in-the-loop workflow, in which we collect user feedback, including satisfaction scores and comments on model responses. The human feedback data is used not only to measure model performance and hallucination, but also to further fine-tune the custom model from the first step through RLHF. Likewise, to address the limited availability of human feedback data, we use LLMs to generate AI grades and feedback that scale up the dataset for reinforcement learning from AI feedback (RLAIF). Various preference alignment techniques can be used in this process, including proximal policy optimization (PPO), direct preference optimization (DPO), odds ratio preference optimization (ORPO), group relative policy optimization (GRPO), and other algorithms.

- Evaluation and continuous learning – Model customization and preference alignment are not a one-time effort. We need to keep monitoring and evaluating the model performance, and restart the process in case of concept drift or model decay.

The overall workflow consists of multiple steps of synthetic data generation, LLM training, feedback collection, preference alignment, and evaluation that involves multiple components and multiple LLMs. In the next section, we discuss using a compound AI system to implement this framework to achieve high versatility and reusability.
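The "restart the process in case of model decay" step can be reduced to a simple monitoring check. The following is a minimal sketch, not part of the original solution: the function name, the tolerance, and the sample scores are hypothetical, and it assumes you periodically collect judge or user scores in the range 0 to 1.

```python
from statistics import mean


def needs_retraining(recent_scores, baseline, tolerance=0.05, min_samples=5):
    """Return True when the recent average score drops below baseline - tolerance.

    All thresholds here are illustrative assumptions, not values from the post.
    """
    if len(recent_scores) < min_samples:
        # Too few observations to decide; keep the current model.
        return False
    return mean(recent_scores) < baseline - tolerance


# Hypothetical scores collected after deployment.
stable_scores = [0.66, 0.68, 0.65, 0.67, 0.66]
drifting_scores = [0.61, 0.58, 0.55, 0.52, 0.50]

print(needs_retraining(stable_scores, baseline=0.67))
print(needs_retraining(drifting_scores, baseline=0.67))
```

In practice you would likely replace the fixed tolerance with a statistical test or a rolling window, but the control flow stays the same: evaluate, compare against a baseline, and trigger the fine-tuning workflow when the gap is too large.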

Compound AI system and the DSPy framework

With the rise of generative AI, scientists and engineers face a much more complex scenario to develop and maintain AI solutions, compared to classic predictive AI. The paper The Shift from Models to Compound AI Systems highlights that state-of-the-art AI results are increasingly obtained by compound systems with multiple components, not just monolithic models. Compound AI systems are systems that implement AI tasks by combining multiple interacting components. These components can include multiple calls to models, retrievers, or external tools. The following diagram compares predictive AI to generative AI.

The concept of a compound AI system enables data scientists and ML engineers to design sophisticated generative AI systems consisting of multiple models and components. You can use a module to incorporate prompt engineering and in-context learning to improve RAG performance, and also design a data architecture with tools to gather external data. You can also build an agentic architecture with multiple LLMs, fine-tune the model to achieve higher performance, and orchestrate the LLM access. Besides the efficiency in system design, the compound AI system also enables you to optimize complex generative AI systems, using a comprehensive evaluation module based on multiple metrics, benchmarking data, and even judgements from other LLMs. The optimization is on the holistic end-to-end solution, rather than on each component separately.

To efficiently build and optimize compound AI systems, we introduce DSPy, an open source Python framework for developers to build LLM applications using modular and declarative programming, whether you’re building simple classifiers, sophisticated RAG pipelines, or agentic workflows. It provides algorithms for optimizing LLMs’ prompts and weights, and automates the prompt tuning process, as opposed to the trial-and-error approach performed by humans. DSPy supports iteratively optimizing all prompts involved against defined metrics for the end-to-end compound AI solution.

The DSPy lifecycle is presented in the following diagram in seven steps. It separates the flow of your program (modules) from the parameters (language model prompts and weights) of each step. These modules define the system behavior in a portable, declarative way. The first four steps cover the DSPy programming stage, including defining your task and its constraints, exploring a few examples, and using that to inform your initial pipeline design. When your system works reasonably well, you can run the DSPy evaluation stage (Steps 5 and 6) to collect an initial development set, define your DSPy metric, and use these to iterate on your system more systematically. Afterwards, DSPy introduces new optimizers (compilers) in Step 7, with language model-driven algorithms to tune LLM prompts and weights, based on predefined evaluation metrics.

RAG pipeline with continuous fine-tuning in a compound AI system

In this post, we provide an example of a question-answer task, using a RAG pipeline along with the continuous self-instruct fine-tuning framework. We build this as a compound AI system and use DSPy to drive the RAG inference, prompt optimization, LLM fine-tuning, and performance evaluation. The overall workflow is shown in the following diagram.

The flow starts from a standard RAG pipeline, followed by a few optimizations on the prompts and the RAG retriever. Then we generate the synthetic training dataset from the RAG knowledge base to fine-tune the generator LLM using RAG for performance improvement. Lastly, we use a separate LLM to generate feedback on the fine-tuned model responses, and use it to conduct the preference alignment training by DPO and PPO. The question-answer outputs from each step are measured by the underlying LLM-as-a-judge evaluation module. In this way, we demonstrate the effectiveness of the compound AI system for the continuous optimizing of the pipeline through RAG optimization and the fine-tuning framework.

In the next sections, we demonstrate how to build this workflow, including the RAG pipeline, optimization, instruction fine-tuning, preference alignment, and model evaluation, into a compound AI system using an Amazon SageMaker notebook instance with the DSPy framework and LLMs on Amazon Bedrock. The code from this post and more examples are available in the GitHub repository.

Prerequisites

To create and run this compound AI system in your AWS account, complete the following prerequisites:

- Create an AWS account if you don’t already have one.

- Set up a SageMaker notebook instance.

- Open JupyterLab in this newly created instance.

- Clone the GitHub repository and follow the steps explained in the README.

- Navigate to the cloned repository and open the notebook folder.

- Enable access to models hosted on Amazon Bedrock. For this post, we enable Anthropic’s Claude 3 Sonnet, Mistral 7B, and Meta Llama 3 8B.

Dataset

For the question-answering task, we use the Contract Understanding Atticus Dataset (CUAD), an open legal contract review dataset created with dozens of legal experts from The Atticus Project, which consists of over 13,000 annotations. The synthetic data generation notebook automatically downloads the CUAD_v1 ZIP file and places it in the required folder named cuad_data.

In case of any issues, you can alternatively download the dataset yourself by following the steps in the README file, store it inside a folder within the SageMaker notebook instance, and use it to perform the steps in the next section.

Prepare question-answer pairs

The first step is to prepare question-answer pairs from the CUAD document by running synthetic data generation.

We use Anthropic’s Claude 3 Sonnet on Amazon Bedrock to synthetically generate question-answer pairs. We run these through the RAG pipeline in the compound AI system to demonstrate the improved accuracy after RAG optimization and model fine-tuning. The generated datasets are in the format of question-answer pairs along with the context (context, question, answer) from the document. We use the question as input to the RAG pipeline and use the answer as ground truth to evaluate the inference accuracy. Additionally, the question-answer pairs are used as training samples for the model fine-tuning. The following is a sample dataset triplet with context and a question-answer pair.

| Context (Snippet from PDF file) | Question | Answer |
| --- | --- | --- |
| THIS STRATEGIC ALLIANCE AGREEMENT (“Agreement”) is made and entered into as of November 6, 2016 (the “Effective Date”) by and between Dialog Semiconductor (UK) Ltd., a corporation organized under the laws of England and Wales, having its principal office at 100 Longwater Avenue, Green Park, Reading, RG2 6GP, United Kingdom (“DIALOG”) and Energous Corporation, a Delaware corporation, having its principal office at 3590 North First Street, Suite 210, San Jose, CA 95134 (“ENERGOUS”) | What is the date of the contract? | November 6, 2016 |
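To make the two uses of a triplet concrete, the following sketch shows one way to represent it and derive both an evaluation item and an instruction-tuning sample from it. The class name, field layout, and prompt template are assumptions for illustration; the notebooks in the repository may organize this differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QATriplet:
    context: str
    question: str
    answer: str


def to_eval_item(triplet):
    """Question goes to the RAG pipeline; answer is kept as ground truth."""
    return {"question": triplet.question, "ground_truth": triplet.answer}


def to_sft_sample(triplet):
    """Build a prompt/completion pair using a hypothetical instruction template."""
    prompt = (
        "Answer the question using only the contract excerpt.\n\n"
        f"Contract excerpt:\n{triplet.context}\n\n"
        f"Question: {triplet.question}\n"
        "Answer:"
    )
    return {"prompt": prompt, "completion": " " + triplet.answer}


# Shortened version of the sample triplet shown above.
sample = QATriplet(
    context="THIS STRATEGIC ALLIANCE AGREEMENT is made and entered into as of November 6, 2016",
    question="What is the date of the contract?",
    answer="November 6, 2016",
)

print(to_eval_item(sample))
print(to_sft_sample(sample)["prompt"])
```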

Create a RAG pipeline

We implement a standard RAG pipeline with DSPy using the following components to create the vector database, set up context retrieval, and generate the answer:

- Configure DSPy to use LLMs on Amazon Bedrock as the RAG generator model:

- Process the dataset to generate logical and syntactically readable chunks. The size and overlap percentage can be empirically determined based on the dataset. For more flexibility, you can generate multiple files from the dataset file and make one file one chunk.

- To set up a RAG retriever, we select ChromaDB as a vector store, and use DSPy’s ChromadbRM module as the retriever model:

- Using these components, we orchestrate a DSPy RAG pipeline to clean the context, generate the answer, and use the LLM-as-a-judge to score the generated answer with respect to the ground truth:
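The chunking step in the preceding list can be sketched in plain Python. This is a simplified, word-based splitter with a fixed overlap, shown only to illustrate how chunk size and overlap interact; the function name and default values are assumptions, and a production pipeline would more likely split on sentence or clause boundaries to keep chunks readable.

```python
def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into chunks of up to chunk_size words, sharing `overlap` words
    between consecutive chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Tiny example: 10 words, windows of 4 words with 1 word of overlap.
demo_text = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(demo_text, chunk_size=4, overlap=1):
    print(chunk)
```

Larger overlap reduces the chance that an answer is cut in half at a chunk boundary, at the cost of storing and embedding more text.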

RAG optimization with DSPy

The next step is to perform RAG optimization with DSPy. DSPy provides the Optimizer module, an algorithm that can tune the parameters of a DSPy program (the prompts and language model weights) to maximize the metrics you specify. It takes in a training set to bootstrap selected training examples, and relies on a metric function that measures proximity to, or a match against, the ground truth. With these, we can compile the RAG pipeline module with a defined optimizer instance to conduct the optimization.

In this post, we use the DSPy Optimizer to learn how to generate the prompt to improve the RAG response accuracy. Because our dataset is small (fewer than 100 examples), we select the BootstrapFewShot teleprompter to compile the RAG prompts and overall pipeline, and use the synthetic dataset with ground truth and the LLM-as-a-judge metric function we defined in the previous sections:
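To build intuition for what bootstrapped few-shot optimization does, the following plain-Python sketch keeps the training examples whose predictions pass the metric and reuses them as demonstrations in the prompt. This is a simplified illustration of the idea, not DSPy's implementation or API; the function names, the stub predictor, and the exact-match metric are all hypothetical stand-ins for the LLM and the LLM-as-a-judge metric.

```python
def bootstrap_demos(trainset, predict, metric, max_demos=4):
    """Keep examples whose prediction passes the metric, up to max_demos."""
    demos = []
    for example in trainset:
        prediction = predict(example["question"])
        if metric(example, prediction):
            demos.append({"question": example["question"], "answer": prediction})
        if len(demos) >= max_demos:
            break
    return demos


def render_prompt(demos, question):
    """Prepend the selected demonstrations to the new question."""
    parts = [f"Question: {d['question']}\nAnswer: {d['answer']}" for d in demos]
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


# Stub predictor standing in for an LLM call (hypothetical canned answers).
canned = {
    "What is the date of the contract?": "November 6, 2016",
    "Who are the parties?": "DIALOG and ENERGOUS",
    "What is the governing law?": "unknown",
}
trainset = [
    {"question": "What is the date of the contract?", "answer": "November 6, 2016"},
    {"question": "Who are the parties?", "answer": "DIALOG and ENERGOUS"},
    {"question": "What is the governing law?", "answer": "California"},
]


def exact_match(example, prediction):
    return example["answer"] == prediction


demos = bootstrap_demos(trainset, canned.get, exact_match)
print(render_prompt(demos, "What is the effective date?"))
```

Only the two examples that the stub answers correctly become demonstrations; the third is discarded because it fails the metric.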

The context retrieval is crucial to the overall RAG accuracy. To evaluate the RAG optimization we’ve described, we create a retriever evaluation by the LLM-as-a-judge to understand how well the retriever is able to pull out the relevant chunks for the incoming user question. The LLM judge is defined in the RetrievalJudge class:

Then we define the metric to measure the retrieval by using the RetrievalJudge, and use the DSPy Evaluate module to generate the accuracy score for retrieval:
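The shape of such a retrieval metric can be sketched without any framework. In the following example, the retriever and the judge are passed in as plain callables; the keyword-overlap retriever and the substring-based judge are deliberately naive, hypothetical stand-ins for ChromaDB retrieval and the LLM-based RetrievalJudge, used only so the example runs offline.

```python
import re


def retrieval_accuracy(examples, retrieve, judge, k=2):
    """Fraction of questions for which the judge accepts the top-k retrieved chunks."""
    if not examples:
        return 0.0
    hits = 0
    for ex in examples:
        chunks = retrieve(ex["question"], k)
        if judge(ex["question"], ex["answer"], chunks):
            hits += 1
    return hits / len(examples)


corpus = [
    "This Agreement is entered into as of November 6, 2016 by DIALOG and ENERGOUS.",
    "This Agreement is governed by the laws of the State of California.",
    "Either party may assign its rights with prior written consent.",
]


def keyword_retrieve(question, k):
    """Toy retriever: rank chunks by the number of words shared with the question."""
    q_words = set(re.findall(r"\w+", question.lower()))
    scored = sorted(
        corpus,
        key=lambda chunk: len(q_words & set(re.findall(r"\w+", chunk.lower()))),
        reverse=True,
    )
    return scored[:k]


def substring_judge(question, answer, chunks):
    """Toy judge: the retrieval is relevant if any chunk contains the answer."""
    return any(answer in chunk for chunk in chunks)


examples = [
    {"question": "What is the date of the contract?", "answer": "November 6, 2016"},
    {"question": "Which laws govern the agreement?", "answer": "State of California"},
    {"question": "What is the termination notice period?", "answer": "30 days"},
]

print(retrieval_accuracy(examples, keyword_retrieve, substring_judge, k=2))
```

Because the judge is injected, swapping in an LLM-based judge only changes the callable, not the metric loop.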

Configure the continuous fine-tuning framework

After the RAG optimization, the compound AI system has the instruction tuning and preference alignment modules, driven by the continuous fine-tuning framework. This includes using the synthetically generated dataset to train the LLM to follow question-answer instructions by SFT, and using another LLM to generate AI feedback on the RAG responses, which is then used for RLAIF with PPO and for preference alignment with DPO and ORPO. In this step, we use Parameter-Efficient Fine-Tuning (PEFT) with Low-Rank Adaptation (LoRA) to reduce the compute requirements and accelerate the training process.
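As an illustration of how graded responses can feed preference alignment, the following sketch turns AI feedback scores into preference pairs. The prompt/chosen/rejected layout is a format commonly used by preference-tuning libraries; the function name, the score gap threshold, and the sample rows are assumptions for this example and are not taken from the repository.

```python
from collections import defaultdict


def build_preference_pairs(feedback, min_gap=0.1):
    """Group scored responses by prompt and pair the best with the worst.

    Prompts whose best and worst scores differ by less than min_gap are
    skipped, because they carry little preference signal.
    """
    by_prompt = defaultdict(list)
    for row in feedback:
        by_prompt[row["prompt"]].append(row)

    pairs = []
    for prompt, rows in by_prompt.items():
        best = max(rows, key=lambda r: r["score"])
        worst = min(rows, key=lambda r: r["score"])
        if best["score"] - worst["score"] >= min_gap:
            pairs.append(
                {"prompt": prompt, "chosen": best["response"], "rejected": worst["response"]}
            )
    return pairs


# Hypothetical AI-graded responses (scores between 0 and 1).
feedback = [
    {"prompt": "What is the date of the contract?", "response": "November 6, 2016", "score": 0.9},
    {"prompt": "What is the date of the contract?", "response": "It was signed in 2015.", "score": 0.2},
    {"prompt": "Who are the parties?", "response": "DIALOG and ENERGOUS", "score": 0.5},
    {"prompt": "Who are the parties?", "response": "Dialog and Energous", "score": 0.5},
]

for pair in build_preference_pairs(feedback):
    print(pair)
```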

At the time of writing, the DSPy Optimization module supports distillation of a prompt-based DSPy program into LLM weight updates using BootstrapFinetune, and does not yet support the fine-tuning methods we defined in the compound AI system. Therefore, we conducted the fine-tuning (instruction tuning and preference alignment) on a Meta Llama 3 8B model separately; refer to the following GitHub repository for more details. With the compound AI system design, we are able to take the fine-tuning results back into the DSPy pipeline, use the LLM-as-a-judge evaluation function to generate the accuracy scores, and benchmark with the standard and optimized RAG inferences. This demonstrates the flexibility and interoperability of the compound AI system, which allows us to seamlessly replace one module with an external component without requiring changes to the entire pipeline.

The following diagram illustrates the workflow.

Define an evaluation approach with DSPy

DSPy provides an Evaluate module for evaluating the compound AI system output by using user-defined metrics. In this post, we use LLM-as-a-judge to evaluate the system output and create the corresponding metrics for benchmarking the accuracy of standard RAG, optimized RAG, and fine-tuned models. Complete the following steps:

- Load the dataset for evaluation in the Example data type. Examples are similar to Python dictionaries but with added utilities such as the dspy.Prediction as a return value. For example:

- Define the LLM-as-a-judge class to adjudicate whether the predicted answer semantically matches the ground truth of the answer. For example, the following FactualityJudge_1 class provides a score between 0 and 1; 0 means a complete mismatch and 1 means a perfect match.

- Define the evaluation metrics from the LLM judge, using DSPy metrics, to mark whether the predicted answer is true or not. For example, the following function returns the accuracy score based on the output of FactualityJudge_1:

- Use the dspy.Evaluate module to generate an accuracy score using the LLM-as-a-judge metrics defined in the previous step:
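The evaluation steps above can be approximated in plain Python to show the moving parts. This sketch assumes the judge returns free text containing a number, which is then parsed and clamped to the range 0 to 1 before averaging. The helper names and the stub predictor and judge are hypothetical; in the actual pipeline, the judge is an LLM call and the averaging is handled by the DSPy Evaluate module.

```python
import re


def parse_judge_score(raw):
    """Extract the first number from the judge output and clamp it to [0, 1].

    Returns 0.0 when no number is found, treating an unparseable verdict as a miss.
    """
    match = re.search(r"-?\d+(?:\.\d+)?", raw)
    if match is None:
        return 0.0
    return min(1.0, max(0.0, float(match.group())))


def mean_accuracy(examples, predict, judge):
    """Average judge score over all examples."""
    if not examples:
        return 0.0
    total = 0.0
    for ex in examples:
        predicted = predict(ex["question"])
        total += parse_judge_score(judge(ex["question"], ex["answer"], predicted))
    return total / len(examples)


# Stubs standing in for the RAG program and the LLM judge.
answers = {"What is the date of the contract?": "November 6, 2016"}


def stub_predict(question):
    return answers.get(question, "I don't know")


def stub_judge(question, ground_truth, predicted):
    return "Score: 1.0" if ground_truth == predicted else "Score: 0.0"


devset = [
    {"question": "What is the date of the contract?", "answer": "November 6, 2016"},
    {"question": "What is the governing law?", "answer": "California"},
]

print(mean_accuracy(devset, stub_predict, stub_judge))
```

Clamping and the fallback to 0.0 make the metric robust to judge outputs that drift from the requested format, which is a common failure mode with LLM-based graders.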

This evaluation process should be conducted on a continuous basis in the compound AI system driven by self-instruct fine-tuning, to make sure the overall performance remains stable despite the changes in the environment or the introduction of new data.

Benchmark RAG and LLM fine-tuning with DSPy

We benchmark the approaches presented in this post using the LLM-as-a-judge evaluation function defined in the previous section with the following settings.

The benchmarking covers five methods: standard RAG, optimized RAG, LLMs fine-tuned by instruction tuning (SFT), LLMs fine-tuned by DPO, and LLMs fine-tuned by ORPO, with the DPO and ORPO models trained on AI feedback (AIF). For each method, the LLM judge provides a decimal accuracy score between 0 and 1.

The standard RAG uses Amazon Titan Text Embedding V2 for the embedding model, and Anthropic’s Claude 3 Haiku model for the generator model. The RAG compilation uses 32 question-answer pairs to optimize the prompts. The same dataset is used for inference. The fine-tuning by SFT, DPO, and ORPO is performed on the Meta Llama 3 8B FM, using training samples synthetically generated from the CUAD documents.

The results are presented in the following tables and charts. The different methods demonstrate different levels of improvement. The improvement is calculated as a percentage: (accuracy of new method – accuracy of standard RAG) / (accuracy of standard RAG) × 100%.
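As a worked example of this formula, the following snippet computes the relative improvement for two of the reported accuracy pairs. The function name is our own; the accuracy values are taken from the results tables in this section.

```python
def improvement_pct(new_accuracy, baseline_accuracy):
    """Relative improvement of new_accuracy over baseline_accuracy, in percent."""
    if baseline_accuracy <= 0:
        raise ValueError("baseline_accuracy must be positive")
    return (new_accuracy - baseline_accuracy) / baseline_accuracy * 100


# Standard RAG vs. DSPy-optimized RAG, as reported in the tables.
haiku = improvement_pct(0.6656, 0.3969)
sonnet = improvement_pct(0.6375, 0.3031)

print(f"Claude 3 Haiku:  {haiku:.2f}%")   # 67.70%
print(f"Claude 3 Sonnet: {sonnet:.2f}%")  # 110.33%
```

Because the improvement is relative to the baseline, the same absolute accuracy yields a larger percentage against the lower Claude 3 Sonnet baseline (0.3031) than against the Claude 3 Haiku baseline (0.3969).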

The RAG pipeline optimized by DSPy improved the accuracy and reduced hallucination.

| | Standard RAG with Claude 3 Haiku | RAG with Claude 3 Haiku optimized by DSPy | Improvement % |
| --- | --- | --- | --- |
| Accuracy by LLM Judge (0-1) | 0.3969 | 0.6656 | 67.70% |

| | Standard RAG with Claude 3 Sonnet | RAG with Claude 3 Sonnet optimized by DSPy | Improvement % |
| --- | --- | --- | --- |
| Accuracy by LLM Judge (0-1) | 0.3031 | 0.6375 | 110.33% |

The custom LLM trained by SFT yielded higher accuracy than the standard RAG.

| | Standard RAG with Claude 3 Haiku | SFT tuned Meta Llama 3 8B | Improvement % |
| --- | --- | --- | --- |
| Accuracy by LLM Judge (0-1) | 0.3969 | 0.4813 | 21.26% |

| | Standard RAG with Claude 3 Sonnet | SFT tuned Meta Llama 3 8B | Improvement % |
| --- | --- | --- | --- |
| Accuracy by LLM Judge (0-1) | 0.3031 | 0.4813 | 58.79% |

The custom LLM trained through preference alignment from human and AI feedback (DPO and ORPO) further improved the model performance. The fine-tuned small model (Meta Llama 3 8B) outperformed the standard RAG pipeline with the medium-size (Anthropic’s Claude Haiku) and larger (Anthropic’s Claude Sonnet) generator models, and was comparable with the prompt-optimized RAG using ground truth data.

| | Standard RAG with Claude 3 Haiku | DPO tuned Meta Llama 3 8B | Improvement % | ORPO tuned Meta Llama 3 8B | Improvement % |
| --- | --- | --- | --- | --- | --- |
| Accuracy by LLM Judge (0-1) | 0.3969 | 0.6719 | 69.29% | 0.6812 | 71.63% |

| | Standard RAG with Claude 3 Sonnet | DPO tuned Meta Llama 3 8B | Improvement % | ORPO tuned Meta Llama 3 8B | Improvement % |
| --- | --- | --- | --- | --- | --- |
| Accuracy by LLM Judge (0-1) | 0.3031 | 0.6719 | 121.68% | 0.6812 | 124.74% |

The following charts compare the accuracy across all tested methods.

The preceding results were generated from a small dataset (32 question-answer pairs). You can use a larger sample set with more question-answer pairs to conduct the benchmarking and compare your own results.

Clean up

Make sure to clean up the following resources to avoid incurring additional costs:

- Delete Amazon Simple Storage Service (Amazon S3) buckets created for data storage and resource sharing.

- Back up the Jupyter notebooks in the SageMaker notebook instance.

- Shut down and delete the SageMaker notebook instance.

Cost considerations

Consider the following costs from the solution deployed on AWS:

- You will incur charges for LLM inference on Amazon Bedrock. For more details, refer to Amazon Bedrock pricing.

- You will incur charges for storing files in S3 buckets. For more details, refer to Amazon S3 pricing.

- You will incur charges for your SageMaker notebook instance. For more details, refer to Amazon SageMaker pricing.

Conclusion

In this post, we presented the continuous self-instruct fine-tuning framework as a compound AI system implemented by the DSPy framework. The framework first generates a synthetic dataset from the domain knowledge base and documents for self-instruction, then drives model fine-tuning through SFT, and introduces the human-in-the-loop workflow to collect human and AI feedback to the model response, which is used to further improve the model performance by aligning human preference through reinforcement learning (RLHF/RLAIF).

We demonstrated the framework for a question-answer task with a RAG pipeline, which improved the end-to-end response accuracy. The workflow is implemented by the DSPy framework; the overall strategy is to use the dspy.Module to connect all the components (RAG pipeline, prompt optimization, LLMs fine-tuned by SFT and RLHF/RLAIF, performance evaluation) together into a compound AI system. Each module can be seamlessly maintained, updated, and replaced without affecting other components in the system. This robust and versatile system design strengthens control and trust through modular design, and increases flexibility and adaptability to changing environments and data sources.

You can implement this continuous fine-tuning framework for LLM performance improvement for your own business use cases, with a compound AI system that provides high flexibility and interoperability. For more details, follow the examples in our GitHub repository.

About the Authors

Yunfei Bai is a Principal Solutions Architect at AWS. With a background in AI/ML, data science, and analytics, Yunfei helps customers adopt AWS services to deliver business results. He designs AI/ML and data analytics solutions that overcome complex technical challenges and drive strategic objectives. Yunfei has a PhD in Electronic and Electrical Engineering. Outside of work, Yunfei enjoys reading and music.


Shayan Ray is an Applied Scientist at Amazon Web Services. His area of research is all things natural language (like NLP, NLU, and NLG). His work has been focused on conversational AI, task-oriented dialogue systems, and LLM-based agents. His research publications are on natural language processing, personalization, and reinforcement learning.


Jose Cassio dos Santos Junior is a Senior Data Scientist member of the MLU team. He is responsible for Curriculum Development for Advanced Modules. As a previous Senior Data Scientist on the AWS LATAM Professional Services Data Science team, he has over 20 years of experience working as a software engineer and more than 10 years of teaching experience at colleges and as an instructor for Linux certification preparation and Microsoft Innovation Center bootcamps. As a business process management expert, he participated in BPO projects for more than 7 years. He holds a Master’s degree in Computer Engineering, a Bachelor’s degree in Physics, and a Bachelor’s degree in Business Administration, specialized in IT Quantitative Methods.
