Machine learning (ML) helps organizations increase revenue, grow their business, and reduce costs by optimizing core business functions such as supply and demand forecasting, customer churn prediction, credit risk scoring, pricing, and shipment delay prediction.

Traditional ML development cycles take weeks to months and require data science understanding and ML development skills that are in short supply. Business analysts’ ideas for using ML models often sit in a backlog for long periods because data engineering and data science teams have limited bandwidth and data preparation takes significant effort.

This post walks through a business use case for a banking institution. We show how a financial or business analyst at a bank can easily predict whether a customer’s loan will be paid in full, written off, or remain in progress, using the machine learning model that best fits the business problem at hand. The analyst can easily pull in the data they need, use natural language to clean it and fill in missing values, and finally build and deploy a machine learning model that accurately predicts loan status as its output, all without needing to become a machine learning expert. The analyst can also quickly create a business intelligence (BI) dashboard from the ML model results within minutes of receiving the predictions. Let’s learn about the services we use to make this happen.

Amazon SageMaker Canvas is a web-based visual interface for building, testing, and deploying machine learning workflows. This allows data scientists and machine learning engineers to work with data and models, visualize their work, and share it with others with just a few clicks.

SageMaker Canvas also integrates with Data Wrangler, which helps you create data flows and prepare and analyze data. Data Wrangler includes a built-in chat option for data preparation, allowing you to explore, visualize, and transform your data in a conversational interface using natural language.

Amazon Redshift is a fast, fully managed, petabyte-scale data warehouse service that is cost-effective and allows you to efficiently analyze all your data using your existing business intelligence tools.

Amazon QuickSight powers data-driven organizations with unified business intelligence (BI) at hyperscale. With QuickSight, all users can meet a variety of analytical needs from the same source of truth through modern, interactive dashboards, paginated reports, embedded analytics, and natural language queries.

Solution overview

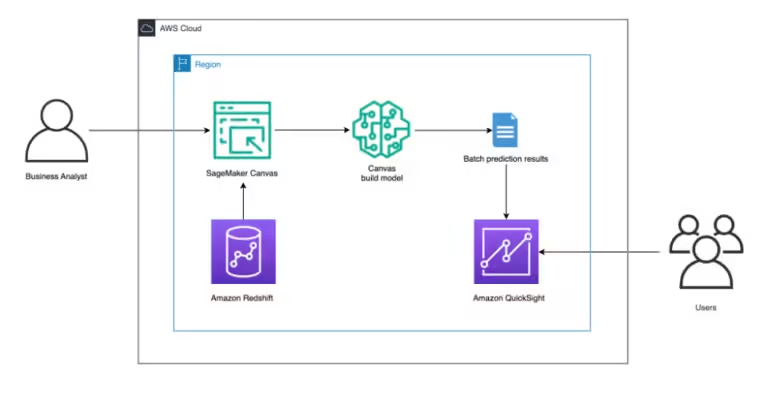

The solution architecture above shows the following steps:

- A business analyst signs in to SageMaker Canvas.

- The business analyst connects to the Amazon Redshift data warehouse and pulls the required data into SageMaker Canvas.

- The business analyst instructs SageMaker Canvas to build a predictive analytics ML model.

- Once the model is built, the business analyst gets batch prediction results.

- The business analyst sends the results to QuickSight for further analysis.

Prerequisites

Before you begin, ensure that the following prerequisites are met:

- An AWS account and role with AWS Identity and Access Management (IAM) permissions to deploy the following resources:

  - IAM roles.

  - A provisioned or serverless Amazon Redshift data warehouse. This post uses a provisioned Amazon Redshift cluster.

  - A SageMaker domain.

  - A QuickSight account (optional).

- Basic knowledge of a SQL query editor.

Set up an Amazon Redshift cluster

We created a CloudFormation template to set up an Amazon Redshift cluster.

- Deploy the CloudFormation template to your account.

- Enter a stack name, then choose Next twice, leaving the remaining parameters at their defaults.

- On the review page, scroll down to the Capabilities section and select I acknowledge that AWS CloudFormation might create IAM resources.

- Choose Create stack.

The stack takes 10 to 15 minutes to run. Once it completes, you can view the outputs of the parent stack and the nested stack.

Parent stack

Nested stack
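If you prefer to script the deployment instead of using the console, the steps above can be sketched with boto3 roughly as follows. This is a minimal sketch, not the post's own method: the function names, the stack name, and the template URL are hypothetical placeholders, and the `Capabilities` values are our assumption about the programmatic equivalent of the IAM acknowledgment checkbox. The client is passed in so the logic can be exercised without AWS credentials.

```python
def build_create_stack_request(stack_name, template_url):
    """Return keyword arguments for CloudFormation's CreateStack call."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,  # hypothetical: use the URL of the post's template
        # Assumed equivalent of ticking the "might create IAM resources" box.
        "Capabilities": ["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    }


def deploy_stack(cfn_client, stack_name, template_url):
    """Create the stack, wait until creation completes, and return the stack ID."""
    request = build_create_stack_request(stack_name, template_url)
    response = cfn_client.create_stack(**request)
    # The waiter polls CloudFormation until the stack reaches CREATE_COMPLETE.
    cfn_client.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]


# Usage (requires boto3 and AWS credentials; values are placeholders):
#   import boto3
#   deploy_stack(boto3.client("cloudformation"), "<your-stack-name>", "<template-url>")
```

Templates that use macros or transforms may need additional capabilities; check the template before relying on this list.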

Sample data

You will use a publicly available dataset about bank customers and their loans, which AWS hosts and maintains in its own S3 bucket for workshop use. The dataset includes customer demographic data and loan terms.

Implementation steps

Load data into your Amazon Redshift cluster

- Connect to your Amazon Redshift cluster using Query Editor v2. To open Query Editor v2, follow the instructions at Open Query Editor v2.

- Create a table in your Amazon Redshift cluster using the following SQL command.

- Load data into the loan_cust table using the following COPY command:

- Query the table to see what your data looks like.
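The exact SQL for these steps is not reproduced here, so the following is only an illustrative sketch of what the three statements might look like, built as strings in Python. Everything specific in it is an assumption: the column names and types (loosely based on fields mentioned later in this post), the CSV file format, the S3 location, and the IAM role ARN are hypothetical placeholders, not the workshop's actual values.

```python
def create_table_sql(table, columns):
    """Build a CREATE TABLE statement from (name, type) pairs."""
    column_defs = ",\n    ".join(f"{name} {sql_type}" for name, sql_type in columns)
    return f"CREATE TABLE IF NOT EXISTS {table} (\n    {column_defs}\n);"


def copy_from_s3_sql(table, s3_uri, iam_role_arn):
    """Build a COPY statement that loads CSV files from S3 into the table."""
    return (
        f"COPY {table}\n"
        f"FROM '{s3_uri}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "FORMAT AS CSV\n"  # assumption: the dataset is stored as CSV
        "IGNOREHEADER 1;"  # assumption: each file starts with a header row
    )


# Hypothetical schema: the real loan_cust table has its own columns and types.
HYPOTHETICAL_COLUMNS = [
    ("ssn", "VARCHAR(11)"),
    ("age", "INTEGER"),
    ("state", "VARCHAR(2)"),
    ("loan_status", "VARCHAR(32)"),
]

statements = [
    create_table_sql("loan_cust", HYPOTHETICAL_COLUMNS),
    copy_from_s3_sql(
        "loan_cust",
        "s3://<bucket>/<prefix>/",  # placeholder S3 location
        "arn:aws:iam::<account-id>:role/<role-name>",  # placeholder role ARN
    ),
    "SELECT * FROM loan_cust LIMIT 10;",
]

for statement in statements:
    print(statement, end="\n\n")
```

You would paste the printed statements into Query Editor v2 after replacing the placeholders with the values from the workshop.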

Set up chat for data

- To use the chat for data option in SageMaker Canvas, you must enable model access in Amazon Bedrock.

  - Open the AWS Management Console, navigate to Amazon Bedrock, and choose Model access in the navigation pane.

  - Choose Enable specific models. Under Anthropic, select Claude and choose Next.

  - Review your selections and choose Submit.

- Navigate to the Amazon SageMaker service in the AWS Management Console, select Canvas, and choose Open Canvas.

- Choose Datasets in the navigation pane, then choose the Import data dropdown and select Tabular.

- For Dataset name, enter redshift_loandata and choose Create.

- On the next page, choose Data Source and select Redshift as the source. Under Redshift, select + Add Connection.

- Enter the following details to establish an Amazon Redshift connection.

  - Cluster identifier: Copy ProducerClusterName from the CloudFormation nested stack outputs. You can refer to the nested stack screenshot above, which shows the cluster identifier output.

  - Database name: Enter dev.

  - Database user: Enter awsuser.

  - Unload IAM role ARN: Copy RedshiftDataSharingRoleName from the nested stack outputs.

  - Connection name: Enter MyRedshiftCluster.

  - Choose Add connection.

- Once the connection is created, expand the public schema, drag the loan_cust table into the editor, and choose Create dataset.

- Select the redshift_loandata dataset and choose Create a data flow.

- Enter redshift_flow as the name and choose Create.

- After the flow is created, choose Chat for data preparation.

- In the text box, enter summarize my data and choose the run arrow.

- The output should look like this:

- Now you can prepare your dataset using natural language. Enter Drop ssn and filter for ages over 17 and choose the run arrow. You will see that it handled both steps. You can also view the PySpark code that it ran. To add these steps as dataset transforms, choose Add to steps.

- Rename the step to drop ssn and filter age > 17, choose Update, and then choose Create model.

- Export the data and build the model: For Dataset name, enter loan_data_forecast_dataset. For Model name, enter loan_data_forecast. For Problem type, choose Predictive analysis. For Target column, select loan_status. Then choose Export and create model.

- Verify that the correct target column and model type are selected and choose Quick build.

- The model is now being created. It typically takes 14 to 20 minutes, depending on the size of the dataset.

- Once the model is trained, you are routed to the Analyze tab. There you can see the average prediction accuracy and each column’s impact on the prediction outcome. Note that because of the stochastic nature of the ML process, your numbers may differ from those shown in the following image.
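To make the natural-language preparation step above concrete (Drop ssn and filter for ages over 17), here is the same logic written as plain Python over a list of dictionaries. This is only an illustration of what the transform does, not the PySpark code that the chat generates; the column name age and the sample rows are assumptions.

```python
def drop_ssn_and_filter_age(rows, min_age_exclusive=17):
    """Drop the ssn field and keep only rows whose age is over the threshold."""
    prepared = []
    for row in rows:
        if row["age"] > min_age_exclusive:
            # Copy every field except ssn into the prepared row.
            prepared.append({key: value for key, value in row.items() if key != "ssn"})
    return prepared


# Hypothetical sample rows; the real dataset has more columns.
sample_rows = [
    {"ssn": "000-00-0001", "age": 17, "loan_status": "in progress"},
    {"ssn": "000-00-0002", "age": 42, "loan_status": "paid in full"},
]

prepared_rows = drop_ssn_and_filter_age(sample_rows)
print(prepared_rows)  # [{'age': 42, 'loan_status': 'paid in full'}]
```

The row with age 17 is removed because the filter keeps ages strictly over 17, and the remaining row no longer carries the ssn field.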

Use the model to make predictions

- Now let’s use the model to predict the future status of loans. Choose Predict.

- Under Choose the prediction type, select Batch prediction, then select Manual.

- Select loan_data_forecast_dataset from the dataset list and choose Generate predictions.

- Once the batch prediction is complete, choose the breadcrumb menu next to the Ready status and choose Preview to view the results.

- You can now view your predictions and download them as a CSV file.

- You can also generate a single prediction for one row of data at a time. Under Choose the prediction type, select Single prediction, change the values of the input fields as needed, and choose Update.
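Once you have downloaded the predictions as CSV, a quick sanity check is to count how many rows were predicted for each loan status. The sketch below uses only the Python standard library. The column names (state, loan_status, probability) and the sample rows are assumptions standing in for the downloaded file, so adjust them to match your export.

```python
import csv
import io
from collections import Counter


def count_predicted_statuses(csv_file, status_column="loan_status"):
    """Count rows per predicted status in an open CSV file."""
    reader = csv.DictReader(csv_file)
    return Counter(row[status_column] for row in reader)


# Hypothetical stand-in for the downloaded predictions file.
SAMPLE_PREDICTIONS = """state,loan_status,probability
TX,paid in full,0.75
CA,written off,0.5
TX,paid in full,0.5
"""

status_counts = count_predicted_statuses(io.StringIO(SAMPLE_PREDICTIONS))
for status, count in status_counts.most_common():
    print(f"{status}: {count}")
```

For a real file, pass `open("<downloaded-file>.csv", newline="")` instead of the in-memory sample.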

Analyze your predictions

Here we show you how to use QuickSight to visualize the prediction data from SageMaker Canvas and gain further insights from your data. SageMaker Canvas integrates directly with QuickSight, a cloud-powered business analytics service that lets employees across your organization build visualizations, perform ad hoc analysis, and quickly get business insights from their data, anytime, on any device.

- With the preview page displayed, choose Send to Amazon QuickSight.

- Enter the QuickSight user name that you want to share the results with.

- Choose Send. You will see a confirmation that your results were sent successfully.

- Now you can create a QuickSight dashboard for the predictions.

  - Navigate to the QuickSight console by entering QuickSight in the console services search bar and choosing QuickSight.

  - Under Datasets, select the SageMaker Canvas dataset you just created.

  - Choose Edit dataset.

  - Under the State field, change the data type to State.

  - Choose Create with Interactive sheet selected.

  - Under Visual types, choose Filled map.

  - Select the State and Probability fields.

  - Under Field wells, choose Probability and change Aggregate to Average and Show as to Percent.

  - Choose Filter and add a filter on Loan status to include paid in full loans only. Choose Apply.

  - At the top right of the blue banner, choose Share and then Publish dashboard.

  - We’re using the name Average Probability of Paying Off Loans by State, but feel free to use your own name.

  - Choose Publish dashboard. That’s it! You can now share this dashboard and the predictions with other analysts and other consumers of this data.
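The filled map built above amounts to a filter followed by a per-state average. As a rough cross-check outside QuickSight, the same aggregation can be sketched in plain Python over the predictions CSV. The column names (state, loan_status, probability), the status label, and the sample rows are assumptions, not values taken from the actual dataset.

```python
import csv
import io
from collections import defaultdict


def average_probability_by_state(csv_file, status="paid in full"):
    """Average the probability column per state for rows with the given status."""
    totals = defaultdict(lambda: [0.0, 0])  # state -> [sum of probabilities, row count]
    for row in csv.DictReader(csv_file):
        if row["loan_status"] != status:
            continue  # mirrors the Loan status filter on the dashboard
        accumulator = totals[row["state"]]
        accumulator[0] += float(row["probability"])
        accumulator[1] += 1
    return {state: total / count for state, (total, count) in totals.items()}


# Hypothetical stand-in for the predictions sent to QuickSight.
SAMPLE_PREDICTIONS = """state,loan_status,probability
TX,paid in full,0.5
TX,paid in full,0.75
TX,written off,1.0
CA,paid in full,0.875
"""

averages = average_probability_by_state(io.StringIO(SAMPLE_PREDICTIONS))
for state in sorted(averages):
    print(f"{state}: {averages[state]:.1%}")
# CA: 87.5%
# TX: 62.5%
```

The written off row for TX is excluded by the status filter, so only the two paid in full rows contribute to the TX average.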


Clean up

To avoid incurring additional charges to your account, complete the following steps:

- Sign out of SageMaker Canvas.

- In the AWS console, delete the CloudFormation stack that you launched earlier in this post.
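If you would rather script the stack deletion, a minimal boto3-based sketch might look like the following. The function name and the stack name are hypothetical placeholders; the client is passed in as a parameter so the logic can be checked without AWS credentials.

```python
def delete_stack_and_wait(cfn_client, stack_name):
    """Request deletion of a CloudFormation stack and wait until it is gone."""
    cfn_client.delete_stack(StackName=stack_name)
    # The waiter polls CloudFormation until the stack reaches DELETE_COMPLETE.
    cfn_client.get_waiter("stack_delete_complete").wait(StackName=stack_name)


# Usage (requires boto3 and AWS credentials; the stack name is a placeholder):
#   import boto3
#   delete_stack_and_wait(boto3.client("cloudformation"), "<your-stack-name>")
```

Deleting the parent stack should also remove its nested stacks, but it is worth confirming in the console that no resources remain.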

Conclusion

We believe that integrating your cloud data warehouse (Amazon Redshift) with SageMaker Canvas opens the door to building more robust ML solutions for your business faster, without needing to move data and without ML experience.

Business analysts can now generate valuable business insights, while data scientists and ML engineers help refine, tune, and extend models as needed. The integration between SageMaker Canvas and Amazon Redshift provides a unified environment for building and deploying machine learning models, so you can focus on creating value with your data.

Additional materials:

- SageMaker Canvas Workshop

- re:Invent 2022 – SageMaker Canvas

- Hands-on course for business analysts – Practical decision making using no-code ML on AWS

About the authors

Suresh Putnam is a Principal Sales Specialist for AI/ML and Generative AI at AWS. He is passionate about helping companies of all sizes transform into fast-moving digital organizations focused on data, AI/ML, and generative AI.

Sohaib Katariwala is a Senior Specialist Solutions Architect at AWS, focusing on Amazon OpenSearch Service. His interests lie in all things data and analytics. More specifically, he is passionate about helping customers use AI in their data strategies to solve modern challenges.

Michael Hamilton is an Analytics and AI Specialist Solutions Architect at AWS. He is interested in all things data-related and enjoys helping customers solve complex use cases.

Nabil Ezharhouni is an AI/ML and Generative AI Solutions Architect at AWS. He is based in Austin, Texas, and is passionate about cloud, AI/ML technologies, and product management. When he is not working, he enjoys spending time with his family and finding the best tacos in Texas. Because… why not?
