Large language models (LLMs) are very large deep learning models that are pre-trained on vast amounts of data. LLMs are highly flexible: one model can perform completely different tasks, such as answering questions, summarizing documents, translating languages, and completing sentences. LLMs have the potential to revolutionize content creation and the way people use search engines and virtual assistants.

Retrieval Augmented Generation (RAG) is the process of optimizing the output of an LLM by having it consult an authoritative knowledge base outside its training data sources before generating a response. LLMs are trained on vast amounts of data and use billions of parameters to generate original output; RAG extends these already powerful capabilities to specific domains or to an organization's internal knowledge base without retraining the model. RAG is a fast and cost-effective approach to improving LLM output so that it stays relevant, accurate, and useful in a given context.

RAG introduces an information retrieval component that uses the user input to first retrieve information from a new data source. This new data, which comes from outside the LLM's original training dataset, is called external data. It can exist in many different formats, including files, database records, and long-form text. An AI technique called an embedding language model converts this external data into numerical representations and stores them in a vector database. This process creates a knowledge library that generative AI models can understand.

RAG introduces additional data engineering requirements.

- A scalable search index must ingest a large corpus of text that covers the desired knowledge domain.

- To enable semantic search during inference, data must be preprocessed. This includes normalization, vectorization, and index optimization.

- These indexes continually accumulate documents. Data pipelines must seamlessly integrate new data at scale.

- The diversity of data increases the need for customizable cleaning and transformation logic to deal with the quirks of different sources.

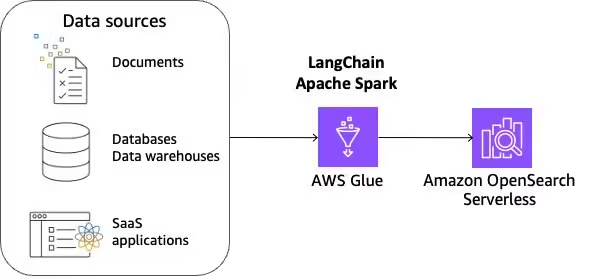

In this post, we explore how to build a reusable RAG data pipeline on LangChain, an open source framework for building applications based on LLMs, and integrate it with AWS Glue and Amazon OpenSearch Serverless. The final solution is a reference architecture for scalable RAG indexing and deployment. We provide sample notebooks covering ingestion, transformation, vectorization, and index management to help teams build high-performance RAG applications from heterogeneous data.

RAG data preprocessing

Data preprocessing is crucial for responsible retrieval from external data with RAG. Clean, high-quality data yields more accurate results in RAG, while privacy and ethical considerations require careful data filtering. This lays the foundation for LLMs with RAG to reach their full potential in downstream applications.

To be able to effectively retrieve external data, it is common to first clean and sanitize documents. You can use Amazon Comprehend or AWS Glue’s sensitive data detection capabilities to identify sensitive data, and use Spark to clean and sanitize the data. The next step is to break the document into manageable chunks. The chunks are then converted to embeddings and written to a vector index while preserving the mapping to the original document. This process is illustrated in the following diagram. These embeddings are used to determine the semantic similarity between the query and the text from the data source.
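As a rough illustration of the cleaning and sanitizing step, the following sketch redacts email addresses and collapses whitespace using plain Python regular expressions. It is a stand-in for, not an example of, Amazon Comprehend or AWS Glue sensitive data detection; the function name `sanitize_text` and the `[REDACTED_EMAIL]` token are hypothetical.

```python
import re

# Deliberately simple pattern; real sensitive-data detection needs far more
# than one regular expression.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def sanitize_text(text: str) -> str:
    """Redact email addresses and normalize whitespace (illustrative only)."""
    redacted = EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)
    # Collapse runs of spaces, tabs, and newlines into single spaces.
    return " ".join(redacted.split())


print(sanitize_text("Contact  jane.doe@example.com\n for details"))
```

In a Spark pipeline, a function like this could be applied to each document before chunking, for example by wrapping it in a user-defined function.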

Solution overview

This solution uses LangChain integrated with AWS Glue for Apache Spark and Amazon OpenSearch Serverless. To make this solution scalable and customizable, we use the distributed capabilities of Apache Spark and the flexible scripting capabilities of PySpark. We use OpenSearch Serverless as a sample vector store and use the Llama 3.1 model.

The advantages of this solution are:

- In addition to chunking and embedding, you have the flexibility to clean, sanitize, and control data quality.

- You can build and manage incremental data pipelines to update embeddings in the vector store at scale.

- You can choose from various embedding models.

- You can choose from a variety of data sources, including databases, data warehouses, and SaaS applications supported by AWS Glue.

This solution covers the following areas:

- Processing unstructured data such as HTML, Markdown, and text files using Apache Spark. This includes distributed cleaning, sanitizing, and chunking of the data, and generating embedding vectors for downstream consumption.

- Bringing everything together in a Spark pipeline to incrementally process your sources and publish the vectors to OpenSearch Serverless.

- Querying the indexed content using the LLM of your choice to provide answers in natural language.

Prerequisites

Before you can continue with this tutorial, you need to create the following AWS resources.

- Amazon Simple Storage Service (Amazon S3) bucket for storing data

- An AWS Identity and Access Management (IAM) role for your AWS Glue notebook, as described in Setting up IAM Permissions for AWS Glue Studio. The role also requires IAM permissions for OpenSearch Serverless.
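A minimal sketch of a policy document for this role, written as a Python dictionary so it can be serialized to JSON. The broad `aoss:*` action on all resources is an assumption that keeps the tutorial simple; scope it down for production use. The helper name `build_aoss_policy` is hypothetical.

```python
import json


def build_aoss_policy() -> dict:
    """Return an IAM policy document granting OpenSearch Serverless access."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "OpenSearchServerless",
                "Effect": "Allow",
                # Assumption: wide-open access for a walkthrough, not least privilege.
                "Action": "aoss:*",
                "Resource": "*",
            }
        ],
    }


print(json.dumps(build_aoss_policy(), indent=2))
```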

To start an AWS Glue Studio notebook, follow these steps.

- Download the Jupyter Notebook file.

- In the AWS Glue console, choose Notebooks in the navigation pane.

- Under Create job, select Notebook.

- For Options, choose Upload Notebook.

- Choose Create notebook. The notebook will start within a minute.

- Run the first two cells to set up an AWS Glue interactive session.

You now have the necessary settings for your AWS Glue notebook.

Set up a vector store

First, create a vector store. Vector stores provide efficient vector similarity search through specialized indexes. RAG complements LLMs with an external knowledge base, typically built using a vector database that holds vector-encoded knowledge articles.
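To make the idea of vector similarity search concrete, here is a minimal brute-force sketch in plain Python. It is only an illustration: a real vector store uses specialized approximate k-NN indexes rather than scanning every vector, and the three-dimensional vectors and document IDs below are made up.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the IDs of the k vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical toy index: document ID -> embedding.
index = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 0.0, 1.0],
}
print(top_k([1.0, 0.0, 0.0], index, k=2))
```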

This example uses Amazon OpenSearch Serverless for its simplicity and scalability, supporting low-latency vector search over up to billions of vectors. For more information, see Amazon OpenSearch Service's vector database capabilities explained.

To set up OpenSearch Serverless, follow these steps:

- In the cell under Vector store setup, replace <your-iam-role-arn> with the Amazon Resource Name (ARN) of your IAM role, replace <region> with your AWS Region, and run the cell.

- Run the following cells to create the OpenSearch Serverless collection, security policies, and access policy.
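The notebook cells themselves are not reproduced here. As a hedged sketch of what provisioning might involve, the following code builds the encryption, network, and data access policy documents and passes them to an OpenSearch Serverless client. The client is expected to behave like `boto3.client("opensearchserverless")`; the policy names, the public network access, and the broad `aoss:*` permissions are assumptions chosen for a demo.

```python
import json


def build_policies(collection_name: str, principal_arn: str) -> dict:
    """Build the three policy documents a vector collection needs."""
    collection = f"collection/{collection_name}"
    encryption = {
        "Rules": [{"ResourceType": "collection", "Resource": [collection]}],
        "AWSOwnedKey": True,
    }
    network = [{
        "Rules": [{"ResourceType": "collection", "Resource": [collection]}],
        "AllowFromPublic": True,  # assumption: public endpoint for a demo
    }]
    data_access = [{
        "Rules": [
            {"ResourceType": "collection", "Resource": [collection],
             "Permission": ["aoss:*"]},
            {"ResourceType": "index", "Resource": [f"index/{collection_name}/*"],
             "Permission": ["aoss:*"]},
        ],
        "Principal": [principal_arn],
    }]
    return {"encryption": encryption, "network": network, "data_access": data_access}


def provision(client, collection_name: str, principal_arn: str):
    """Create the policies first, then the vector search collection."""
    policies = build_policies(collection_name, principal_arn)
    client.create_security_policy(
        name=f"{collection_name}-enc", type="encryption",
        policy=json.dumps(policies["encryption"]))
    client.create_security_policy(
        name=f"{collection_name}-net", type="network",
        policy=json.dumps(policies["network"]))
    client.create_access_policy(
        name=f"{collection_name}-access", type="data",
        policy=json.dumps(policies["data_access"]))
    return client.create_collection(name=collection_name, type="VECTORSEARCH")
```

With AWS credentials configured, this could be called as `provision(boto3.client("opensearchserverless"), "rag-demo", role_arn)`, where the collection name is a placeholder. The encryption policy is created before the collection because the collection needs a matching encryption policy to exist.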

OpenSearch Serverless has been successfully provisioned. You are now ready to insert your document into the vector store.

Document preparation

This example uses a sample HTML file as the input. It is an article with specialized content that an LLM cannot answer questions about without RAG.

- Run the cell under Download sample document to download the HTML file, create a new S3 bucket, and upload the HTML file to that bucket.

- Run the cell under Document preparation. It loads the HTML file into the Spark DataFrame df_html.

- Run the two cells under Parsing and cleaning HTML to define the functions parse_html and format_md. They use Beautiful Soup to parse the HTML and markdownify to convert it to Markdown, so that MarkdownTextSplitter can be used for chunking. These functions are used later inside a Spark Python user-defined function (UDF).

- Run the cell under Chunking HTML. This example uses LangChain's MarkdownTextSplitter to split the text into manageable chunks along Markdown headings. Adjusting chunk size and overlap is important to avoid breaking up contextual meaning, which can affect the accuracy of subsequent vector store searches. This example uses a chunk size of 1,000 and a chunk overlap of 100 to maintain information continuity, but these settings can be fine-tuned for different use cases.

- Run the three cells under Embedding. The first two cells configure the LLM and deploy it through Amazon SageMaker. In the third cell, the function process_batch injects the documents into the vector store through the OpenSearch implementation in LangChain, which takes the embedding model and the documents as input to populate the entire vector store.

- Run the two cells under Preprocessing HTML documents. The first cell defines the Spark UDF, and the second cell triggers a Spark action that runs the UDF on each record containing the entire HTML content.
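The parsing and chunking steps in the list above can be sketched without Spark or third-party libraries. This is a simplified stand-in using only the Python standard library, not the notebook's parse_html and format_md functions, which rely on Beautiful Soup and markdownify. The chunker is a plain fixed-size character window and does not split along Markdown headings as MarkdownTextSplitter does. All names are hypothetical.

```python
from html.parser import HTMLParser


class MarkdownExtractor(HTMLParser):
    """Tiny HTML-to-Markdown converter: headings, paragraphs, list items."""

    HEADINGS = {"h1": "# ", "h2": "## ", "h3": "### "}
    BLOCKS = {"p", "li"}
    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.blocks = []       # finished Markdown blocks
        self._buffer = []      # text fragments of the block being built
        self._prefix = ""      # Markdown prefix for the current block
        self._skip_depth = 0   # > 0 while inside <script> or <style>

    def _flush(self):
        text = " ".join("".join(self._buffer).split())
        if text:
            self.blocks.append(self._prefix + text)
        self._buffer, self._prefix = [], ""

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1
        elif tag in self.HEADINGS or tag in self.BLOCKS:
            self._flush()
            self._prefix = self.HEADINGS.get(tag, "- " if tag == "li" else "")

    def handle_endtag(self, tag):
        if tag in self.SKIPPED:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in self.HEADINGS or tag in self.BLOCKS:
            self._flush()

    def handle_data(self, data):
        if self._skip_depth == 0:
            self._buffer.append(data)


def html_to_markdown(html: str) -> str:
    """Strip scripts and styles, keep headings and text as Markdown."""
    parser = MarkdownExtractor()
    parser.feed(html)
    parser.close()
    parser._flush()
    return "\n\n".join(parser.blocks)


def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 100):
    """Split text into windows of chunk_size characters that overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break
    return chunks
```

With the settings used in this post (chunk size 1,000, overlap 100), each chunk repeats the last 100 characters of the previous one, so a sentence cut at a boundary still appears whole in at least one chunk as long as it is shorter than the overlap.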

You have successfully ingested the embeddings into your OpenSearch Serverless collection.
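The notebook's process_batch function is not shown in this post. As a hedged sketch of the general batching pattern, the code below groups chunks into fixed-size batches and hands each batch to a writer callback. The names `batched`, `ingest`, and `write_batch` are hypothetical; in practice the callback could wrap a vector store call such as LangChain's `add_texts`.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def batched(items: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def ingest(chunks: Iterable[str],
           write_batch: Callable[[List[str]], None],
           batch_size: int = 100) -> int:
    """Send chunks to the writer in batches and return how many were sent."""
    total = 0
    for batch in batched(chunks, batch_size):
        write_batch(batch)
        total += len(batch)
    return total


# Usage with an in-memory list standing in for the vector store.
written = []
count = ingest([f"chunk-{i}" for i in range(5)], written.append, batch_size=2)
print(count, written)
```

Writing in batches keeps the number of requests to the embedding endpoint and the vector store bounded, which matters when each Spark partition holds many chunks.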

Q&A

This section demonstrates question answering using the embeddings ingested in the previous section.

- Run the two cells under Question answering to create the OpenSearchVectorSearch client and the LLM using Llama 3.1, and to define a RetrievalQA chain, where the chain_type parameter lets you customize how retrieved documents are added to the prompt. Optionally, you can choose another foundation model (FM). In that case, refer to the model card and adjust the chunk length accordingly.

- Run the following cell to perform a similarity search against the vector store using the query "What is task decomposition?", which returns the most relevant information. It takes a few seconds for documents to become available in the index. If you see empty output in the next cell, wait 1-3 minutes and try again.

Now that we have the relevant documents, we use the LLM to generate an answer based on them.

- Run the following cell to call the LLM and generate an answer based on the retrieved documents.
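Conceptually, a RetrievalQA chain with a "stuff"-style chain_type places the retrieved chunks directly into the prompt before calling the LLM. The sketch below shows that idea in plain Python. The prompt wording, the `max_chars` budget, and the function name are assumptions for illustration, not LangChain's actual template.

```python
def build_stuff_prompt(question: str, documents, max_chars: int = 4000) -> str:
    """Concatenate retrieved documents into one prompt, within a size budget."""
    context_parts = []
    used = 0
    for doc in documents:
        # Stop adding context once the next document would exceed the budget.
        if used + len(doc) > max_chars:
            break
        context_parts.append(doc)
        used += len(doc)
    context = "\n\n".join(context_parts)
    return (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_stuff_prompt(
    "What is task decomposition?",
    ["Doc one.", "Doc two."],
)
print(prompt)
```

The size budget is why the chunk length matters when you switch models: the retrieved chunks plus the question must fit within the context window of the model you choose.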

As expected, the LLM answered with a detailed explanation of task decomposition. For production workloads, it is important to balance latency and cost efficiency in semantic search over vector stores, which means choosing the k-NN algorithm and parameters that best suit your specific needs. Additionally, consider using product quantization (PQ) to compress the embeddings stored in the vector database and reduce their memory footprint. This approach may come with some trade-off in accuracy, but is advantageous for latency-sensitive tasks. For more information, see Choose the k-NN algorithm for your billion-scale use case with OpenSearch.
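A back-of-the-envelope calculation shows why product quantization helps at this scale. The numbers below (one billion vectors, 1,024 dimensions, 64 sub-vectors with 8-bit codes) are hypothetical, and the estimate ignores codebooks and index overhead.

```python
def raw_vector_bytes(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> int:
    """Memory for uncompressed float32 vectors."""
    return num_vectors * dimensions * bytes_per_value


def pq_code_bytes(num_vectors: int, num_subvectors: int, code_bits: int = 8) -> int:
    """Memory for PQ codes: one code of code_bits per sub-vector."""
    return num_vectors * num_subvectors * code_bits // 8


# Hypothetical workload, not taken from this post.
n, d, m = 1_000_000_000, 1024, 64
raw = raw_vector_bytes(n, d)   # 4 bytes per dimension
pq = pq_code_bytes(n, m)       # 1 byte per sub-vector
print(f"raw: {raw / 1e12:.3f} TB, PQ codes: {pq / 1e9:.0f} GB, ratio: {raw // pq}x")
```

Under these assumptions, the vectors shrink from about 4 TB to 64 GB, at the cost of approximating each sub-vector by its nearest codebook entry.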

Clean up

Now move on to the final step of cleaning up your resources.

- Run the cell under Clean up to delete the S3, OpenSearch Serverless, and SageMaker resources.

- Delete the AWS Glue notebook job.

Conclusion

This post described a reusable RAG data pipeline using LangChain, AWS Glue, Apache Spark, Amazon SageMaker JumpStart, and Amazon OpenSearch Serverless. This solution provides a reference architecture for ingesting, transforming, vectorizing, and managing RAG indexes at scale using the distributed capabilities of Apache Spark and the flexible scripting capabilities of PySpark. This allows preprocessing of external data in phases such as cleaning, sanitizing, chunking the document, generating vector embeddings for each chunk, and loading into the vector store.

About the authors

Noritaka Sekiyama is a Principal Big Data Architect on the AWS Glue team. He is responsible for building software deliverables that assist customers. In his free time, he enjoys cycling on his road bike.

Akito Takeki is a Cloud Support Engineer at Amazon Web Services. He specializes in Amazon Bedrock and Amazon SageMaker. In his free time, he enjoys traveling and spending time with his family.

Ray Wang is a Senior Solutions Architect at Amazon Web Services. Ray is dedicated to building modern solutions on the cloud, especially in NoSQL, big data, and machine learning. An avid go-getter, he has passed all 12 AWS certifications, broadening as well as deepening his technical expertise. He loves reading and watching science fiction movies in his free time.

Vishal Kajam is a software development engineer on the AWS Glue team. He is passionate about distributed computing and using ML/AI to design and build end-to-end solutions for customers' data integration needs. In his free time, he enjoys spending time with his family and friends.

Savio D’Souza is a software development manager on the AWS Glue team. His team works on generative AI applications for the data integration domain and distributed systems to efficiently manage data lakes on AWS and optimize Apache Spark for performance and reliability.

Kinshuk Pahale is a Principal Product Manager at AWS Glue. He leads a team of product managers focused on the AWS Glue platform, developer experiences, data processing engines, and generative AI. He has been with AWS for four and a half years. Previously, he held product management positions at Proofpoint and Cisco.
